Agentic AI for next-gen cybersecurity operations

Cyber threats are escalating in volume and sophistication, costing enterprises an average of $4.88 million per breach in 2024. Traditional security operations are overwhelmed: security teams face an average of 4,484 alerts per day, with 67% left unreviewed and many dismissed as false positives. This overload, coupled with talent shortages and complex threat vectors, has exposed the limitations of static security tools.

Agentic AI offers a transformative solution. Unlike basic automation, agentic AI systems are autonomous, adaptive, and capable of making real-time security decisions across detection, investigation, and response workflows. These agents learn continuously, reduce alert fatigue, and drive intelligent prioritization, allowing SecOps teams to manage growing security workloads without proportional increases in headcount. Organizations deploying agentic AI report up to a 50% reduction in alert volume, along with savings in average cost per breach. Agentic AI is rapidly shaping the future of cyber resilience, redefining how enterprise SOCs detect, respond to, and adapt to threats.

In this article, we unpack the architecture, capabilities, and strategic role of agentic AI in cybersecurity, along with how LeewayHertz operationalizes this paradigm to enable adaptive, enterprise-ready defense.

- Core capabilities of agentic AI in cyber defense

- Key cybersecurity domains empowered by agentic AI

- Architectural patterns of agentic AI for cybersecurity

- Benefits of agentic AI for security teams

- Challenges and guardrails

- How LeewayHertz integrates AI and cybersecurity to build enterprise-ready solutions

- ZBrain Builder’s approach to agentic AI in cybersecurity

- How LeewayHertz helps in solving cybersecurity use cases with ZBrain AI agents

Core capabilities of agentic AI in cyber defense

Effective agentic AI for cyber defense exhibits several core capabilities that enable it to tackle complex security challenges autonomously:

- Autonomous reasoning – The AI agent can make independent decisions with minimal human intervention. It analyzes data, evaluates risks, and decides on actions on its own. This means the agent can, for example, notice an unusual login attempt and decide to flag or block it in real-time without waiting for a person’s judgment. Autonomous reasoning allows these agents to assess situations and execute security tasks end-to-end.

- Multi-step execution – Agentic AI can perform complex, multi-step workflows through chain-of-thought reasoning. Rather than one-and-done rules, the agent breaks down tasks into subtasks and plans a sequence of actions. For instance, an agent might detect a malware infection, then systematically flag the host to isolate it, scan other systems for indicators of compromise, and finally generate a remediation plan. This ability to reason through multi-step processes (often using frameworks like ReAct, which interleaves reasoning and acting steps) lets the AI handle sophisticated incident response or investigation procedures that involve several decisions in a row.

- Tool use and integration – Unlike a monolithic system, an agentic AI can invoke tools, APIs, and external systems as part of its workflow. It might query a threat intelligence database, run a script on a firewall, or pull data from a knowledge base on the fly. In practice, this is enabled by defining actions the agent can take (e.g., API calls, database queries) within its environment. Security AI agents integrate with existing security infrastructure – SIEMs (Security Information and Event Management), ticketing systems, firewalls, etc. – so they can take direct action or gather information as needed. Tool use is critical in cyber defense; an agent might automatically run a vulnerability scanner or trigger a SOAR playbook step as part of its reasoning process.

- Memory – Agentic AI systems maintain memory of prior interactions and observations. They remember context from previous steps or past incidents and use it to inform future decisions. For example, if an agent analyzed an alert an hour ago, it can recall that analysis when a related alert appears, avoiding redundant work. Memory can be short-term (within a single multi-step run) and long-term (learning from historical incidents). Techniques such as episodic memory storage and stateful orchestration frameworks enable agents to carry forward facts or conclusions across an ongoing session. This continuity is crucial in cybersecurity, where understanding the sequence of events (kill chain) or the history of an asset’s behavior can make detection and response far more effective.

- Self-improvement – Because they incorporate AI/ML models, these agents continuously learn from new data and outcomes. Through feedback loops, an agentic AI can refine its detection models or decision policies over time. This might involve reinforcement learning (learning from trial-and-error rewards, with human feedback where needed) to improve decisions, or updating its machine learning models as it ingests more security telemetry. The result is an AI system that gets smarter and more precise – for instance, reducing false alarms as it learns what benign activity looks like in a particular environment. Self-improvement means today’s AI SOC analyst agent can become markedly more effective a few months down the line after encountering dozens of new attack scenarios and incorporating that experience.

- Policy alignment – Autonomy must be bounded by the organization’s policies and ethics. A strong agentic AI is designed for alignment with security policies, compliance requirements, and human intent. In practice, this means the agent has guardrails: it won’t take forbidden actions, and it respects rules (like privacy constraints or escalation procedures). For example, an agent might autonomously trigger a password reset notification for a user if suspicious activity is detected, but it would not delete user data or shut down systems unless explicitly allowed by policy. Ensuring policy alignment often requires building in approval steps or validations so that the AI’s independence doesn’t lead to unintended consequences. Enterprises also enforce this through governance frameworks – e.g., the agent might check a planned action against an allowlist/denylist or require a human manager to approve certain high-impact responses. Alignment is a key capability because an AI agent moving at machine speed could cause damage if misaligned; thus, the best agentic systems combine autonomy with mechanisms to stay within the bounds of organizational and ethical norms.
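The allowlist/denylist check mentioned under policy alignment can be sketched as a small function that runs before any action is executed. This is a minimal illustration and not a specific product's API: the action names, the `Verdict` enum, and the `check_action` function are all hypothetical.

```python
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    DENY = "deny"
    NEEDS_APPROVAL = "needs_approval"


# Hypothetical policy tables; a real deployment would load these from governed config.
ALLOWLIST = {"flag_login", "send_password_reset_notice", "open_ticket"}
DENYLIST = {"delete_user_data"}
HIGH_IMPACT = {"shutdown_system", "isolate_host"}


def check_action(action: str) -> Verdict:
    """Decide whether the agent may run `action` on its own."""
    if action in DENYLIST:
        return Verdict.DENY
    if action in HIGH_IMPACT:
        # High-impact responses are routed to a human instead of being run directly.
        return Verdict.NEEDS_APPROVAL
    if action in ALLOWLIST:
        return Verdict.ALLOW
    # Default-deny: anything not explicitly listed is refused.
    return Verdict.DENY
```

The default-deny branch is a design choice for the sketch: an action the policy has never seen is treated as forbidden rather than permitted.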

Key cybersecurity domains empowered by agentic AI

Agentic AI can enhance a wide range of cybersecurity functions. Here are key domains in which these autonomous agents are making a significant impact:

Threat detection and monitoring

AI agents excel at continuously monitoring networks, endpoints, and user activity to catch threats that humans might miss. They can ingest massive volumes of telemetry data in real time and use anomaly detection to flag subtle signs of intrusion. For example, an agentic system in a Security Operations Center (SOC) watches streams of log data 24/7 and identifies patterns indicative of malware or an active attacker – even if those patterns don’t match any known signature. By correlating data across sources and applying machine learning techniques, agents can detect advanced attacks (like low-and-slow insider attacks or multi-stage exploits) that static rules would overlook. In practice, organizations use such agents for tasks like continuous SIEM monitoring, user behavior analytics, and real-time alerting. The agent’s autonomy helps ensure no gap in coverage – it never gets tired or overwhelmed – and it can respond the instant it sees something malicious.
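To make the idea of signature-free anomaly detection concrete, here is a minimal sketch that flags a value when it strays too far from a historical baseline. It uses a simple z-score as a stand-in for the machine learning techniques the text refers to; the function name, the threshold of 3.0, and the sample data are assumptions for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], current: float, threshold: float = 3.0) -> bool:
    """Flag `current` if it lies more than `threshold` standard deviations from the baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return current != mu
    return abs(current - mu) / sigma > threshold


# Illustrative baseline: logins per hour for one account.
logins_per_hour = [4, 5, 6, 5, 4, 6, 5]
```

With this baseline, `is_anomalous(logins_per_hour, 40)` flags the spike, while `is_anomalous(logins_per_hour, 6)` does not.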

Incident response and remediation

When a security incident occurs, agentic AI can dramatically speed up containment and recovery. These agents automatically perform the investigation steps a human responder would – gathering evidence, determining scope, and even executing mitigation – but at machine speed. For instance, upon detecting a ransomware outbreak, an AI agent might isolate affected machines, block malicious IP addresses, and initiate backup restoration quickly, limiting damage before an analyst even gets involved. Agentic AI can also coordinate complex response playbooks: one agent could disable a compromised user account while another pushes a firewall rule update to cut off C2 traffic. By automating these responses, organizations see much lower mean time to contain and recover incidents. It’s important to note that well-designed AI incident responders still work under oversight – e.g., critical actions might require human approval or follow predefined safe response policies – but they handle the bulk of steps autonomously. This frees up human responders to focus on strategy and edge cases instead of routine containment steps.

Vulnerability management

Autonomous agents significantly improve how organizations find and fix vulnerabilities. Rather than occasional scans, an AI agent can continuously analyze code, configurations, and threat intelligence to identify emerging vulnerabilities or misconfigurations. Some agents act like automated penetration testers – proactively probing systems for weaknesses or simulating attacker behavior to discover exploitable flaws. Others ingest feeds of new CVEs (Common Vulnerabilities and Exposures) and cross-reference against your asset inventory to pinpoint which systems are at risk, then even prioritize them by calculating potential impact. Beyond detection, agentic AI can help with remediation: for example, generating a patch or configuration fix and applying it. There are already examples of AI agents that not only flag a vulnerability but also generate the code to remediate it and open a ticket for developers with instructions. By introducing this level of automation, organizations move toward real-time vulnerability management – reducing the window of exposure between a vulnerability being discovered and being resolved.
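The CVE cross-referencing step described above can be sketched as a join between a vulnerability feed and an asset inventory, followed by a simple priority score. The data classes, the `criticality` weight, and the scoring formula (severity multiplied by criticality) are assumptions made for this example, and the CVE identifiers used with it should be treated as placeholders.

```python
from dataclasses import dataclass


@dataclass
class Cve:
    cve_id: str
    product: str
    cvss: float  # base severity score, 0.0 to 10.0


@dataclass
class Asset:
    hostname: str
    product: str
    criticality: int  # hypothetical business weight, 1 (low) to 5 (high)


def prioritize(cves: list[Cve], assets: list[Asset]) -> list[tuple[float, str, str]]:
    """Return (priority, hostname, cve_id) tuples, highest priority first."""
    findings = []
    for cve in cves:
        for asset in assets:
            if asset.product == cve.product:
                findings.append((cve.cvss * asset.criticality, asset.hostname, cve.cve_id))
    return sorted(findings, reverse=True)
```

A real agent would match on product versions as well as names; the sketch matches on name only to keep the join visible.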

Identity and access management (IAM)

Agentic AI assists in monitoring and protecting user access, a critical area often exploited via phishing or misuse of credentials. AI agents can continuously watch for anomalous login patterns, privilege escalations, or abnormal resource access that might indicate an account takeover or insider abuse. For example, if an employee’s account suddenly starts accessing large amounts of sensitive data at odd hours, an agent can flag it or automatically trigger an action (like a step-up authentication challenge or session termination). In modern zero-trust architectures, some organizations employ AI agents to dynamically adjust access rights – granting or revoking privileges on the fly based on real-time risk assessments. A very concrete use-case is using agentic AI to fight phishing: if an agent detects signs of a successful phishing attack (say, an OAuth token was illicitly granted to a malicious app), it can immediately revoke that access, force a password reset, and log the user out of all sessions. By acting faster than any human, the AI potentially prevents an attacker using stolen credentials from causing damage. Overall, agentic AI strengthens IAM by enforcing policies continuously and responding to identity-related threats (compromised accounts, permission abuse) in an autonomous yet precise manner.
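As a rough illustration of the risk-based response described above, the sketch below maps a few identity signals to an action. The signals, weights, cutoffs, and the `access_decision` function are assumptions chosen for the example, not values from any real IAM product.

```python
def access_decision(hour: int, mb_downloaded: float, baseline_mb: float, new_device: bool) -> str:
    """Map a few risk signals to an IAM action. Weights and cutoffs are illustrative."""
    risk = 0
    if hour < 6 or hour >= 22:           # outside assumed working hours
        risk += 1
    if mb_downloaded > 5 * baseline_mb:  # far above the user's normal volume
        risk += 2
    if new_device:
        risk += 1

    if risk >= 3:
        return "terminate_session"
    if risk >= 1:
        return "step_up_auth"
    return "allow"
```

For instance, a 3 a.m. session that pulls many times the usual data volume reaches the `terminate_session` branch, while an off-hours login alone only triggers a step-up challenge.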

Data protection and exfiltration monitoring

Modern enterprises generate oceans of data, and keeping that data from leaking is a huge challenge. Agentic AI offers a way to monitor data flows and user behaviors to detect potential data exfiltration or misuse. For instance, an agent might observe patterns of database queries or file transfers and notice when something deviates from normal – such as a user downloading an unusual volume of data or accessing files outside their usual scope. The agent can then alert or intervene (maybe quarantining the data or locking the user’s access) to stop a breach in progress. Unlike static DLP (data loss prevention) rules, an AI agent can adapt to context: it could distinguish between a legitimate large data export for a report versus a suspicious bulk data dump by correlating with user roles, past behavior, and content sensitivity. Additionally, if an incident does occur, agentic AI can help with forensics on data usage – automatically compiling which records were accessed by a malicious process, or tracing the path of an exfiltrated file across systems. By reasoning over file logs, network flows, and user actions collectively, AI agents provide a more intelligent shield around sensitive information, catching stealthy exfiltration attempts that humans might overlook.

Compliance and audit readiness

Ensuring continuous compliance with security frameworks (like SOC 2, ISO 27001) and passing audits is tedious but crucial. Agentic AI can act as an ever-vigilant compliance auditor that continuously checks systems against compliance requirements and security policies. Instead of doing compliance checks once a quarter, an AI agent can, for example, daily verify that all systems have proper logging enabled, required controls are in place, and no configurations drift from policy. If it finds an issue – say an S3 bucket becomes publicly readable or an audit log is turned off – the agent can immediately flag it or even remediate it (perhaps re-enabling the log and notifying the team). These agents understand not just hard technical rules but also the intent behind standards, and they map that to the environment to spot violations in real-time. They then produce reports or even create tickets for each compliance gap they find. The benefit is continuous audit readiness – minimizing unexpected complications at audit time because the AI has been catching and helping fix issues all along. Moreover, everything the agent does can be logged and justified, so there is an evidence trail for auditors showing how compliance is being enforced. By weaving agentic AI into compliance, organizations can maintain a stronger security posture and prove it instantly.
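The continuous configuration check described above can be sketched as a comparison between a required baseline and a resource's current settings. The `REQUIRED` keys and the `find_drift` function are hypothetical and do not map to any specific framework control.

```python
# Hypothetical required baseline; keys and values are illustrative only.
REQUIRED = {"logging_enabled": True, "public_read": False, "encryption_at_rest": True}


def find_drift(resource_name: str, config: dict) -> list[str]:
    """Return one human-readable finding per setting that deviates from REQUIRED."""
    findings = []
    for key, expected in REQUIRED.items():
        actual = config.get(key)  # a missing key shows up as None and counts as drift
        if actual != expected:
            findings.append(f"{resource_name}: {key} is {actual!r}, expected {expected!r}")
    return findings
```

Each finding string could feed a ticket or a report, which also gives auditors a record of what was checked and when.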

Risk assessment and policy enforcement

Security is not just about reacting to threats, but also proactively assessing risk and enforcing policies to reduce exposure. Agentic AI can serve as a dynamic risk advisor and enforcer. For instance, agents can continuously evaluate risk scores for business assets or business processes by analyzing vulnerabilities, threat intelligence, and business impact data – far faster than periodic manual risk reviews. If the risk level for a system spikes, an AI agent could recommend or implement policy changes, like tightening network segmentation or requiring additional authentication. Agents can also enforce security policies in real-time: think of a policy that no sensitive data should be emailed in plaintext – an AI agent could intercept email traffic, detect a violation (via NLP content scanning), and block or encrypt that message. Similarly, agents might leverage frameworks like OPA (Open Policy Agent) to check any intended action (retrieving certain data, etc.) against a set of security policies before allowing it. The agent essentially becomes a policy gatekeeper that autonomously says “yes” or “no” (or routes for approval) based on compliance with security rules. This ensures that as conditions change, policies are consistently applied without relying solely on human intervention. In sum, agentic AI can make risk management more continuous and granular, and policy enforcement more uniform – reducing the chances of human error or oversight creating an opening for attackers.
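The plaintext-email policy mentioned above can be sketched as a small gatekeeper function. Note the substitution: a regular expression for card-like numbers stands in for the NLP content scanning the text describes, and the function name and return values are assumptions for illustration.

```python
import re

# Stand-in for NLP content scanning: matches 16-digit, card-like numbers.
CARD_PATTERN = re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b")


def enforce_email_policy(body: str, encrypted: bool) -> str:
    """Return 'block' for sensitive content sent in plaintext, otherwise 'allow'."""
    contains_sensitive = bool(CARD_PATTERN.search(body))
    if contains_sensitive and not encrypted:
        return "block"
    return "allow"
```

A production gatekeeper could add a third outcome that encrypts the message or routes it for approval instead of blocking it outright.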

Security reporting and forensics

After an incident (or on a regular basis), producing analysis and reports is another area where agentic AI shines. AI agents can automatically gather evidence and document the timeline and details of security events, saving analysts huge amounts of time. For example, if a security alert turns out to be a real incident, an agent could compile all related logs, chat messages, and system states into a coherent incident report. Prophet Security describes an AI SOC agent that, after investigating an alert, drafts a detailed incident summary including findings and risk assessments. These agents provide structured conclusions – formatted notes, clear risk ratings, and even recommended next steps – whereas a human might take hours to write up the same details. In threat forensics, an agentic AI might automatically trace an attacker’s footprint across systems (which accounts they used, what malware files were dropped, etc.) and produce a forensic timeline. By connecting the dots across disparate data sources and narrating what happened, the AI agent can generate reports that are useful for both technical responders and executive summaries. This not only reduces manual reporting burden but also ensures consistency and thoroughness. Some platforms note that their AI can generate these incident reports or summaries instantly when an alert is confirmed, which means stakeholders get faster insight into what happened and how to respond. Overall, agentic AI helps turn raw security data into actionable knowledge, seamlessly bridging into the documentation and lessons-learned phase of cybersecurity.
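A forensic timeline of the kind described above is, at its core, a merge of events from several sources ordered by time. The sketch below assumes each event is a dictionary with `time` (ISO 8601), `source`, and `detail` keys; that event shape and the `build_timeline` function are hypothetical.

```python
from datetime import datetime


def build_timeline(*sources: list[dict]) -> list[str]:
    """Merge events from several log sources into one chronologically ordered timeline."""
    events = [event for source in sources for event in source]
    events.sort(key=lambda e: datetime.fromisoformat(e["time"]))
    return [f'{e["time"]} [{e["source"]}] {e["detail"]}' for e in events]
```

An LLM-based agent would typically take a merged timeline like this as input and write the narrative summary on top of it.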

Architectural patterns of agentic AI for cybersecurity

Deploying agentic AI in cybersecurity isn’t a one-size approach; several architectural patterns have emerged to orchestrate how these AI agents reason and act. Below are key patterns and components commonly used to build robust agentic AI solutions in security:

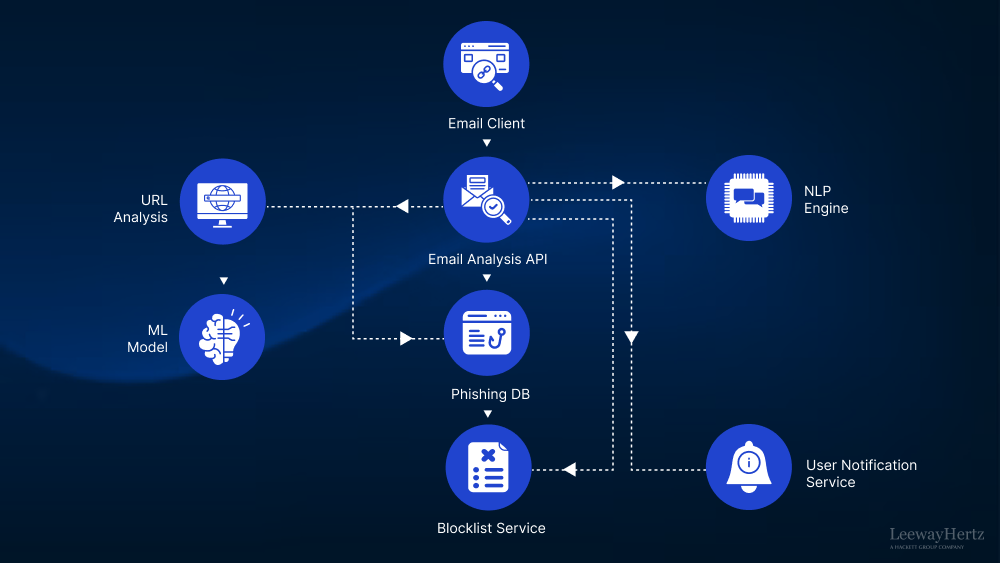

Orchestrated agents vs. single agents

In some cases, a single autonomous agent can handle a task, but often multiple agents and tools are orchestrated in a workflow. An agentic workflow means the process flow (the sequence of steps, branching logic, etc.) is explicitly defined or controlled by a coordinator, rather than leaving all decisions to one agent’s black-box reasoning. For example, a phishing response workflow might have a defined first step to isolate the email, a second step to query a database, and a third step to either alert or auto-remediate based on the results. Each step could be handled by agents, but the overall progression is orchestrated.

Orchestrated agents benefit from explicit decision nodes – points where you can enforce checks or logic (like “if data exfiltration risk > X, then require manager approval”) rather than the agent deciding everything internally. This pattern provides more predictability and traceability, since the flow is structured. In contrast, a standalone agent might dynamically decide its next action via its own reasoning (more flexibility, but harder to govern). Many enterprise solutions use a hybrid method: orchestrated frameworks to enforce critical control points, combined with agentic reasoning within certain steps for flexibility.
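The phishing response workflow described above can be sketched as a coordinator that runs fixed steps in order and then applies an explicit decision node. The step functions, the in-memory `known_bad` set standing in for a database query, and the sender address are all hypothetical.

```python
def isolate_email(ctx: dict) -> dict:
    ctx["isolated"] = True
    return ctx


def lookup_sender(ctx: dict) -> dict:
    # Hypothetical local lookup standing in for a database query.
    known_bad = {"attacker@example.test"}
    ctx["malicious"] = ctx["sender"] in known_bad
    return ctx


def run_phishing_workflow(sender: str) -> dict:
    """Run the fixed sequence; the coordinator, not an agent, owns the branching."""
    ctx = {"sender": sender, "trace": []}
    for step in (isolate_email, lookup_sender):
        ctx = step(ctx)
        ctx["trace"].append(step.__name__)
    # Explicit decision node: the branch condition is visible and auditable.
    ctx["outcome"] = "auto_remediate" if ctx["malicious"] else "alert_analyst"
    ctx["trace"].append(ctx["outcome"])
    return ctx
```

Because every step is appended to `trace`, the path taken for any given email can be reconstructed afterwards, which is the traceability benefit the orchestrated pattern is meant to provide.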

Multi-agent crews (Teams of agents)

Complex security challenges may be handled by a crew of specialized agents working together, instead of one monolithic agent. In this pattern, you might have a supervisor agent that breaks down a task and delegates subtasks to worker agents with specific expertise. For instance, in an alert triage scenario, a supervisor agent could assign one agent to gather log data, another to check threat intelligence sources, and another to summarize findings, then compile the results.

Some agent orchestration platforms, such as ZBrain Builder (discussed later), implement this pattern in practice. ZBrain Builder uses an “Agent Crew” concept, in which you define multiple agents and their roles in a coordinated fashion. The agents in a crew can run in parallel or in sequence depending on dependencies, and they communicate their results back to the orchestrator agent. The benefit of multi-agent teams is modularity and specialization – each agent can be simpler (focused on one task like network scan or explanation writing) and the system can tackle multi-faceted problems more efficiently. Multi-agent collaboration patterns have to manage inter-agent communication and state sharing. Typically, a shared memory or context is used so that agents can build on each other’s work (e.g. the summary agent sees the data that the log-gatherer agent collected). This crew approach maps well to security operations where a “divide-and-conquer” strategy can speed up analysis. It does require robust orchestration to handle agent failures and to decide when to merge results, but when done right it enables concurrent, specialized processing that greatly accelerates complex workflows.
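The supervisor-and-workers pattern described above can be sketched with a thread pool: the supervisor fans a task out to specialized workers, collects their results into a shared context, and tolerates a failing worker. This is a generic illustration and not ZBrain Builder's API; the worker functions and their return strings are placeholders.

```python
from concurrent.futures import ThreadPoolExecutor


def gather_logs(alert_id: str) -> str:
    return f"logs for {alert_id}"


def check_threat_intel(alert_id: str) -> str:
    return f"intel for {alert_id}"


def summarize(shared: dict) -> str:
    # The summary worker reads what the other workers wrote to the shared context.
    return "; ".join(shared[name] for name in sorted(shared))


def supervisor(alert_id: str) -> str:
    """Delegate subtasks in parallel, then merge the results."""
    workers = {"logs": gather_logs, "intel": check_threat_intel}
    shared = {}
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, alert_id) for name, fn in workers.items()}
        for name, future in futures.items():
            try:
                shared[name] = future.result(timeout=5)
            except Exception as exc:
                # One failed worker is recorded rather than aborting the whole crew.
                shared[name] = f"{name} failed: {exc}"
    return summarize(shared)
```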

Reasoning loops (ReAct pattern)

One notable architecture for agentic AI is the ReAct loop (short for Reasoning and Acting). In this pattern, an agent iteratively alternates between thinking (reasoning) and doing (acting) in a loop until it converges on a solution. For example, an AI might start by thinking: “This alert looks like a possible port scan; I should gather more info.” Then act: call a tool to fetch recent firewall logs. Then observe the result and think again: “The logs confirm repeated sequential port access; next I should check if any host responded with data.” Then again, execute a query on an IPS (Intrusion Prevention System) or endpoint logs, and so on. The ReAct paradigm explicitly prompts the AI (especially LLM-based agents) to generate a chain-of-thought, decide an action, execute it, then use the outcome to inform the next thought. This loop continues until the agent arrives at an answer or completes the task.

In cybersecurity, reasoning loops are extremely useful for investigative tasks – the agent can dynamically decide which tools or data to pull based on what it finds step-by-step, much like a human analyst formulating and testing hypotheses. The ReAct approach was introduced in research to improve how LLMs solve problems by not separating thinking from doing. It has since been adopted in many agent frameworks because it allows adaptive, open-ended problem solving without hardcoding every step. The agent essentially writes its own playbook on the fly. However, these loops must be monitored because an agent could get stuck or go off-track, so a maximum iteration count or a sanity-check mechanism is often put in place. Overall, reasoning loops give agentic AI the ability to handle situations with unknown steps by working them out iteratively, which aligns well with the trial-and-error nature of solving novel security incidents.
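The port-scan walkthrough above can be sketched as a loop that alternates between a reasoning step and a tool call, with a hard cap on iterations. The `fake_reasoner` function is a scripted stand-in for an LLM, and the tool names and their canned outputs are hypothetical.

```python
def fake_reasoner(observations: list[str]) -> tuple[str, str]:
    """Stand-in for an LLM: returns (thought, action) based on what has been seen so far."""
    if not observations:
        return ("Possible port scan; gather firewall logs.", "fetch_firewall_logs")
    if "sequential port access" in observations[-1]:
        return ("Scan confirmed; check whether any host responded.", "query_endpoint_logs")
    return ("Enough evidence collected.", "finish")


# Canned tool outputs; real tools would call a firewall or endpoint API.
TOOLS = {
    "fetch_firewall_logs": lambda: "sequential port access from 203.0.113.7",
    "query_endpoint_logs": lambda: "no host responded with data",
}


def react_loop(max_iterations: int = 5) -> list[tuple[str, str, str]]:
    """Alternate reasoning and acting until the reasoner finishes or the cap is hit."""
    steps = []
    observations = []
    for _ in range(max_iterations):
        thought, action = fake_reasoner(observations)
        if action == "finish":
            break
        observation = TOOLS[action]()
        observations.append(observation)
        steps.append((thought, action, observation))
    return steps
```

The `max_iterations` cap is the simplest form of the safeguard mentioned above: even if the reasoner never decides to finish, the loop ends.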

Decision nodes and approval gates

Inserting decision nodes and approval gates into agent workflows is a critical pattern for safety and governance. A decision node is a point in an automated flow where some logic determines the next path – for example, a node could automatically decide “malware detected = yes, proceed to quarantine step; if no, end the flow.” These are commonplace in traditional orchestrated playbooks, and they remain important in agentic AI systems to ensure transparent logic and checks. More importantly, approval gates (human-in-the-loop checkpoints) are used when certain actions are high-risk or when the AI’s confidence is low. At an approval gate, the agent must get a human’s review or sign-off before continuing. For instance, an agent might draft an incident remediation plan but pause for a security engineer to approve it if it involves shutting down a production server.

Platforms like ZBrain Builder allow designers to place such human review steps inside an agent crew’s flow. This ensures that although the agent can do the heavy lifting, it doesn’t unilaterally make potentially disruptive decisions without oversight. Approval gates can also be triggered dynamically – e.g. if an agent’s confidence score is below a threshold or it flags uncertainty, it can route the decision to a human. In summary, integrating decision nodes and HITL (Human-in-the-loop) approval steps combines the speed of AI with the prudence of human judgment. It’s a pattern that acknowledges that while autonomy is valuable, there must be controlled hand-offs in a well-governed security process. By designing flows with these checkpoints, organizations can prevent “runaway” agents and make the behavior of agentic systems more reliable and aligned with operator expectations.
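A dynamically triggered approval gate can be sketched as a check on both the action's risk and the agent's confidence. The action name, the 0.8 threshold, and the `approver` callback (standing in for a human reviewer) are assumptions for illustration and do not reflect any platform's actual interface.

```python
HIGH_RISK_ACTIONS = {"shutdown_production_server"}  # hypothetical action name
CONFIDENCE_FLOOR = 0.8  # illustrative threshold


def needs_human_approval(action: str, confidence: float) -> bool:
    """Gate on either a high-risk action or low agent confidence."""
    return action in HIGH_RISK_ACTIONS or confidence < CONFIDENCE_FLOOR


def execute(action: str, confidence: float, approver=None) -> str:
    """Run the action only if no gate applies or a human approver signs off."""
    if needs_human_approval(action, confidence):
        if approver is None or not approver(action):
            return "held_for_review"
    return "executed"
```

With no approver available, a gated action is held rather than executed, so the safe outcome is the default.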

Graph-based workflows and LangGraph

As agentic systems scale in complexity, representing the logic and memory as a graph becomes useful. Graph-based workflows allow branching, merging, and looping paths that aren’t just linear sequences – much like a flowchart. Each node in the graph could be an agent invocation, a tool action, or a conditional decision, and edges define the possible transitions. This graph structure is well-suited to model the often complex logic of cybersecurity operations (e.g., multiple investigative paths that later converge). Tools like LangGraph enable designing these workflows where the state can pass along the graph edges, giving agents memory of past node outputs. LangGraph, in particular, allows agents in a crew to maintain and share context, effectively building a stateful reasoning graph.

In practice, this means one can design a security playbook as a directed graph of nodes: some nodes call LLM-based agents (which themselves might do ReAct loops internally), other nodes call external APIs, and the graph handles how data flows and under what conditions each node executes. The graph approach makes complex flows easier to visualize and control, and it inherently supports parallel branches (for example, trigger two investigation paths at once and then join results). Additionally, representing knowledge (enterprise data, threat intelligence) as a graph can be leveraged by agents – they can traverse knowledge graphs to infer connections during reasoning. Overall, graph-based orchestration gives a robust framework for coordinating multi-agent and multi-step processes, providing the backbone on which agentic AI can be deployed in large-scale, production-grade cybersecurity workflows. It complements the agents’ intelligence with structured flow control and memory passing, merging AI flexibility with software engineering rigor.
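The graph idea can be illustrated without any framework: nodes are functions that update a shared state, and edges are functions that pick the next node from that state. The sketch below is plain Python and deliberately does not use LangGraph's API; the node names, state keys, and the `run_graph` helper are hypothetical.

```python
def run_graph(nodes: dict, edges: dict, start: str, state: dict, max_steps: int = 20):
    """Walk a directed graph; each node updates `state`, each edge picks the next node."""
    current, visited = start, []
    while current is not None and len(visited) < max_steps:
        visited.append(current)
        state = nodes[current](state)    # node reads and updates the shared state
        current = edges[current](state)  # edge returns the next node, or None to stop
    return state, visited


nodes = {
    "triage": lambda s: {**s, "severity": "high" if s["hits"] > 3 else "low"},
    "investigate": lambda s: {**s, "report": f"investigated {s['host']}"},
    "close": lambda s: {**s, "report": "closed as benign"},
}
edges = {
    "triage": lambda s: "investigate" if s["severity"] == "high" else "close",
    "investigate": lambda s: None,
    "close": lambda s: None,
}
```

The `max_steps` guard matters once the graph contains loops, since a cyclic path would otherwise never terminate.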

Benefits of agentic AI for security teams

Adopting agentic AI in cybersecurity offers numerous benefits that directly address pain points of modern security operations. Key advantages include:

- Speed and scale of response – Agentic AI dramatically accelerates detection and response actions. By operating at machine speed, AI agents can recognize a threat and contain it instantly, far faster than any human. This speed translates to lower Mean Time to Detect/Respond (MTTD/MTTR), limiting attacker dwell time. At the same time, agents provide scalable coverage across your environment – they don’t slow down as the network grows or more alerts come in. An AI agent can monitor thousands of endpoints or logs in parallel, scaling protection seamlessly with the environment. This combination of speed and scalability means security teams can effectively cover much more ground, much faster. Instead of needing one analyst per console or per 100 alerts, an AI agent can handle the first pass for virtually unlimited sources concurrently. The result is a security operation that keeps pace with the volume and velocity of modern threats.

- Reduced alert fatigue – Security teams often drown in false or low-priority alerts. Agentic AI helps cut through the noise by intelligently filtering, correlating, and prioritizing alerts. Rather than a human manually sifting a queue, an AI agent can analyze incoming events in context – merging related alerts and dismissing those that are likely benign. By looking at the whole picture of activity across users and systems, AI agents filter out noise and highlight truly meaningful threats, drastically reducing how many alerts analysts must chase. For example, if multiple low-severity events together indicate a serious attack chain, the agent will raise the priority, or conversely suppress dozens of trivial alerts that stem from a single benign cause. This reduces the cognitive load on security engineers and prevents important warnings from being lost in a sea of minor notifications. Organizations that deploy AI agents for alert triage often report dramatically fewer alerts reaching human analysts, with only the high-fidelity, contextualized incidents being escalated. In short, agentic AI serves as an ever-vigilant level-1 analyst that never tires of the monotony, ensuring human experts can focus attention where it truly matters.

- Contextual and intelligent decision-making – Unlike simple automation scripts, AI agents make decisions with a rich understanding of context. They consider multiple data sources and past learnings when deciding how to handle a threat. This leads to more accurate and nuanced decision-making. For instance, an agent can weigh user behavior history, device security posture, network traffic patterns, and threat intelligence together before deciding an alert is malicious. By using behavioral baselines and pattern recognition, agentic AI detects subtle anomalies or multi-step attacks that static, context-less rules miss. The agent’s responses are also context-aware – for example, quarantining a server only if it’s truly exhibiting dangerous behavior, thus avoiding unnecessary disruption. In essence, agentic AI brings a form of judgment to automated decisions, driven by analytics on diverse data. Security teams benefit from fewer false positives and smarter prioritization, as the AI’s decisions factor in the bigger picture. Moreover, agents can incorporate threat intelligence and historical incident knowledge on the fly, so decisions are informed by the latest knowledge of attacker tactics. This context-driven intelligence means agentic AI not only works faster than humans, but often makes more informed decisions, leading to better security outcomes.

- Continuous learning and adaptation – Cyber adversaries constantly evolve their tactics, but agentic AI systems continuously learn and adapt in response. These agents improve with every new piece of data and every outcome, using techniques like reinforcement learning to refine their behavior. As a result, an AI agent might start by catching known threats, but over time, it identifies novel attack patterns because it has learned what normal looks like and detects deviations. This adaptive defense is a huge benefit over static systems. The continuous learning also applies to reducing errors – if an agent incorrectly flagged something and got feedback, it will adjust to avoid that mistake in the future. Over months and years, the AI becomes extremely tailored to the organization’s environment. It develops an intuition for the network’s patterns, much like an experienced analyst would after long tenure, except the AI’s experience accumulates much faster and across more dimensions. This ever-improving capability means the value of agentic AI compounds over time, giving security teams increasing returns as the system hones itself.

- Reduced workload and enhanced focus for humans – By taking over many of the routine and time-consuming tasks, agentic AI effectively augments the security team, acting as tireless junior analysts or responders. Mundane tasks like log parsing, initial triage, and compiling incident reports can be fully offloaded to AI agents. This not only increases throughput but also significantly reduces burnout on security staff. Human analysts can then focus on higher-level strategy, complex investigations, and creative problem-solving that truly require human insight. Organizations often find that after introducing AI automation, their teams can finally address proactive improvements that they never had time for before. The consistency of AI handling repetitive tasks also ensures fewer things fall through the cracks, ultimately strengthening the organization’s security posture without linear increases in manpower.
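The alert-correlation benefit listed above can be sketched as grouping raw alerts by a shared key and escalating one summarized incident per group. Grouping on `(host, rule)` and ranking by the highest severity in the group are simplifying assumptions; the alert fields are hypothetical.

```python
from collections import defaultdict


def correlate(alerts: list[dict]) -> list[dict]:
    """Group alerts by (host, rule) and keep one summarized incident per group."""
    groups = defaultdict(list)
    for alert in alerts:
        groups[(alert["host"], alert["rule"])].append(alert)

    incidents = []
    for (host, rule), members in groups.items():
        incidents.append({
            "host": host,
            "rule": rule,
            "count": len(members),
            "severity": max(a["severity"] for a in members),
        })
    # Highest severity first, so analysts see the most urgent incident at the top.
    return sorted(incidents, key=lambda i: i["severity"], reverse=True)
```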

Challenges and guardrails

While agentic AI brings powerful capabilities, it also introduces unique challenges and risks that organizations must address. Proper guardrails and governance are essential to harness these autonomous systems safely and effectively. Key challenges and corresponding guardrails include:

- Safety and alignment – A foremost concern is ensuring AI agents act in alignment with human values, intent, and safety requirements. An autonomous agent, if misdirected, could take actions that cause disruptions or harm (for example, deleting critical files in an attempt to stop an attack). To prevent this, agents must be designed for alignment – they should have clear objectives and constraints that reflect security policy and ethical guidelines. One guardrail is the principle of least privilege: restrict what systems or data the agent can access or modify to only what it truly needs. This way, even if it behaves unexpectedly, the blast radius is limited. Another practice is extensive testing of the agent with various scenarios to ensure it responds safely. Fail-safes are also critical – for instance, if an agent is about to execute a potentially dangerous action, having an approval gate (as discussed) or a second validation agent double-check the move can avert missteps. Keeping humans in the loop, especially for irreversible or high-impact decisions, helps maintain control. Essentially, alignment challenges are addressed by combining technical guardrails (permissions, allowlists, rule-based checks) with oversight mechanisms so that the AI’s autonomy is always bounded by what the organization considers safe and acceptable behavior.

- Hallucinations and errors – Many agentic AIs rely on large language models or other predictive algorithms that can sometimes produce incorrect or fabricated outputs (a phenomenon known as hallucination in AI). In a cybersecurity context, a hallucination might mean an AI incorrectly identifying a benign file as malware because of coincidental patterns or generating a false incident report. If not checked, these errors could lead the AI agent to take wrong actions or mislead human analysts. Guardrails to manage this include implementing validation steps for the agent’s critical outputs. For example, if an agent writes a summary or recommendation, another agent can verify factual correctness against known data. Some platforms introduce a separate “validator” agent whose sole job is to catch mistakes or policy violations in the primary agent’s output. Hallucination guardrails might also involve using more deterministic checks for certain decisions – e.g., the AI can suggest a response, but a rule-based engine confirms that the triggering condition is indeed present. Moreover, organizations use content filters (to ensure the AI isn’t introducing anything toxic or irrelevant) and escalation rules: if the AI expresses low confidence or the scenario is novel, the agent can automatically escalate to a human or cross-verify with an alternate method. The key is acknowledging that AI can make mistakes, so there need to be safeguards to detect and correct those errors before they cause harm. Over time, continuous learning should reduce the error rate, but guardrails serve as a safety net when the AI’s judgment is not 100% reliable.

- Policy compliance and ethical use – With autonomous systems, there’s a risk they might violate regulatory or internal policies if not properly guided. For instance, an agent might pull sensitive personal data into an analysis or share information externally in a way that breaches privacy laws, or perhaps enforce a security measure that inadvertently discriminates against a set of users (ethical bias issue). Ensuring regulatory compliance and ethical operation is thus paramount. One solution is to embed compliance checks directly into the agent’s decision process: e.g., before an agent publishes a report, it could run a PII detection guardrail to redact personal data. Enterprises are also integrating policy engines (like Open Policy Agent) such that any action the agent considers is evaluated against formal policies – only if it passes, the agent can proceed. This is analogous to unit tests or business rule validation, but in real-time for agent actions. Additionally, alignment guardrails (as defined by McKinsey and others) ensure the agent’s outputs stay within expected bounds – for example, that it doesn’t stray from its intended use case or start giving advice outside its domain. On the ethical side, bias mitigation is important: training and testing the AI on diverse datasets and scenarios helps, as does transparency (having the AI explain its reasoning) so humans can spot unfair biases. Many organizations also establish an AI governance committee or framework to oversee deployments, addressing questions like how decisions are audited and how to handle responsibility if the AI makes a wrong call. In summary, strong governance guardrails (legal, ethical, and policy-based) must be in place so that autonomous agents augment security without becoming a compliance liability or ethical risk.

- Oversight and control – Handing over tasks to AI agents doesn’t mean abdication of responsibility. Security teams need visibility into what agents are doing and the ability to intervene or adjust as needed. Challenges here include a lack of transparency (the “black box” problem) and potential over-reliance on AI without proper monitoring. To counter this, organizations implement extensive observability and logging for agentic systems. Every action taken by the agent should be logged with detail (what it did, why, what tool was used, etc.). These logs serve both for real-time monitoring and after-the-fact audits. Dashboards are often set up to track agent activity, success/failure rates, and performance metrics. If an agent is stuck in a loop or failing repeatedly, the system can alert an operator to disable that agent. Regular review of agent decisions by humans (at least in a sample or when the AI is new) helps ensure it’s performing as expected. Another control is the ability to tune or update the agent’s policies easily – e.g., security teams might discover they need to refine the conditions under which an agent quarantines a device, and they should be able to do so without retraining an entire model (having configurable rules or thresholds feeding into the agent’s logic helps here). Guardrails like requiring approval for certain actions (as discussed) are part of this, but it’s also about cultural readiness – the security team should treat the AI agent as a junior colleague that still needs supervision and mentorship. With such oversight in place, organizations can enjoy the efficiency of autonomy while remaining in ultimate control of their security posture.

Implementing these guardrails ensures that the powerful autonomy of agentic AI is balanced with safety, reliability, and accountability. Many toolkits and frameworks now offer built-in guardrail features – from content filters to role-based access control for agents – reflecting the industry’s recognition that you should “trust but verify” when deploying AI agents. By proactively addressing the challenges of hallucination, alignment, compliance, and control, security leaders can confidently integrate agentic AI into their operations and mitigate risks of unintended consequences.
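To make the layering of these guardrails concrete, here is a minimal sketch of a gate that sits between an agent and the systems it acts on. It combines an allowlist (least privilege), an approval gate for high-impact actions, escalation on low confidence, and an audit log. The action names, the confidence threshold, and the `GuardrailGate` class are hypothetical, chosen only for illustration; they do not represent any particular product's API.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical action names and threshold, used only for illustration.
ALLOWED_ACTIONS = {"quarantine_file", "isolate_host", "open_ticket"}
HIGH_IMPACT_ACTIONS = {"isolate_host"}
MIN_CONFIDENCE = 0.8


@dataclass
class ProposedAction:
    agent: str
    name: str
    target: str
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0


@dataclass
class GuardrailGate:
    audit_log: List[dict] = field(default_factory=list)

    def evaluate(self, action: ProposedAction) -> str:
        """Return 'deny', 'escalate', or 'allow' for a proposed action."""
        if action.name not in ALLOWED_ACTIONS:
            decision = "deny"        # least privilege: not on the allowlist
        elif action.name in HIGH_IMPACT_ACTIONS:
            decision = "escalate"    # approval gate: a human must confirm
        elif action.confidence < MIN_CONFIDENCE:
            decision = "escalate"    # low confidence: hand off to an analyst
        else:
            decision = "allow"
        # Record every decision so it can be audited later.
        self.audit_log.append({
            "agent": action.agent,
            "action": action.name,
            "target": action.target,
            "decision": decision,
        })
        return decision
```

In this sketch the checks are deliberately deterministic: the agent may propose anything, but only the gate decides what actually runs, and every decision leaves a record.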

How LeewayHertz integrates AI and cybersecurity to build enterprise-ready solutions

LeewayHertz combines deep cybersecurity expertise with advanced AI capabilities to help enterprises address the evolving threat landscape while accelerating digital transformation. Through ZBrain Builder, its proprietary agentic AI orchestration platform, LeewayHertz builds intelligent agents that complement its cybersecurity services, enabling adaptive defense mechanisms, compliance automation, and secure AI deployments.

Strategic cybersecurity advisory enhanced by AI

LeewayHertz provides tailored cybersecurity consulting across zero-trust architectures, AI governance, risk management, and compliance frameworks like SOC 2, HIPAA, and GDPR. ZBrain agents augment this advisory layer by automating risk assessments, compliance checks, and control validations, enabling faster, more consistent evaluation workflows.

Proactive threat detection and incident response

In managed security environments, LeewayHertz employs AI-driven detection systems to identify anomalies, behavioral deviations, and threat patterns across enterprise surfaces. Solutions built by ZBrain Builder enhance SOC operations through agent-based alert triage, automated incident documentation, and threat modeling—improving mean time to detect and respond (MTTD/MTTR).

Securing AI systems by design

AI security is a first-class concern. LeewayHertz employs secure AI engineering practices, from threat modeling and adversarial testing to bias audits and prompt injection defenses. With ZBrain Builder, organizations can deploy self-monitoring LLM agents, validate outputs for compliance, and manage data lineage securely in real time.

Data governance with AI-driven automation

Data discovery, classification, and DLP are enhanced by ZBrain agents that continuously tag sensitive assets and generate automated compliance reports. This supports secure data engineering pipelines, reducing manual effort while improving data privacy posture.

Infrastructure and identity security at scale

Across hybrid cloud networks, ZBrain agents monitor system logs and network telemetry to detect policy violations, unauthorized access, or misconfigurations. Combined with IAM strategy and anomaly detection services, these capabilities ensure end-to-end protection across identity and infrastructure layers.

Enabling AI-powered digital resilience

Finally, LeewayHertz helps enterprises deploy GenAI securely by conducting feasibility assessments, red-teaming exercises, and LLM-based agent development. ZBrain Builder acts as the foundation for building scalable, auditable, and compliant AI workflows, enabling organizations to harness innovation without compromising security or governance.

ZBrain Builder’s approach to agentic AI in cybersecurity

To illustrate how these patterns and principles come together in practice, let’s look at ZBrain Builder, an enterprise agentic AI platform designed for building and orchestrating agentic AI applications. ZBrain Builder provides a unified environment to implement autonomous agents and workflows, specifically suited to complex domains like cybersecurity. Below, we map its capabilities to the agentic AI patterns and use cases discussed above:

Low-code flows

ZBrain Builder offers a visual, low-code interface to design Flows, which are essentially orchestrated workflows. Security teams can drag and drop nodes representing triggers, actions (API calls, model invocations), and decision logic onto a canvas. This aligns with the graph-based workflow pattern: each Flow is a directed graph of steps (including conditionals, loops, and even human-in-the-loop approval nodes).

For example, one could create a “Phishing Response Flow” with nodes to extract indicators from an email, query an intelligence database, then branch – auto-delete the email if malicious indicators are confirmed, or escalate to an analyst if unclear. The low-code aspect means you don’t have to write code for the logic; you configure it visually. This makes designing and adjusting complex security playbooks much faster and more accessible to a range of users (from developers to analysts). The flow engine provides the structured orchestration backbone, ensuring each stage is explicit and auditable, which is crucial for governance.
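The branching logic of such a phishing flow can be sketched in a few lines of Python. This is not how ZBrain Builder represents Flows internally (those are configured visually); it only illustrates the decision structure. The function names, the URL-only indicator extraction, and the `lookup` callback standing in for an intelligence database are all assumptions.

```python
import re
from typing import Callable, Dict, List

# Simplified indicator extraction: URLs only (an assumption for brevity).
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")


def extract_indicators(email_body: str) -> List[str]:
    """Pull candidate indicators (here, URLs) out of an email body."""
    return URL_PATTERN.findall(email_body)


def phishing_response_flow(
    email_body: str,
    lookup: Callable[[str], str],
) -> Dict[str, object]:
    """Extract indicators, query intelligence, then branch.

    `lookup` is a hypothetical callback returning a verdict string such as
    "malicious" or "unknown" for each indicator.
    """
    indicators = extract_indicators(email_body)
    verdicts = {ioc: lookup(ioc) for ioc in indicators}
    if any(v == "malicious" for v in verdicts.values()):
        action = "delete_email"          # confirmed malicious indicator
    else:
        action = "escalate_to_analyst"   # unclear: keep a human in the loop
    return {"indicators": indicators, "verdicts": verdicts, "action": action}
```

Each stage returns explicit data, which mirrors the point about auditability: the indicators, the verdicts, and the chosen branch are all available for review after the run.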

AI agents and agent crews

Within ZBrain Builder, you can build LLM-based agents, which use large language models under the hood for reasoning, and group them into Agent Crews. An Agent Crew is ZBrain’s implementation of the multi-agent team pattern. You might have a crew composed of a Supervisor Agent and several Subordinate Agents, each specialized – for example, one agent to ingest and summarize logs, another to perform a malware analysis via an external API, and a third to compose a report. ZBrain Builder allows you to visually map out this structure: who the supervisor is, how tasks are delegated, and how data flows between agents. It also supports parallel execution, so multiple agents can operate concurrently when possible.

In a cybersecurity scenario, you could instantiate an “Alert Triage Crew” where the supervisor agent receives an alert and spawns child agents to gather context from different tools (SIEM, EDR, IAM logs), then another to collate the findings. The platform handles the orchestration of the crew and the consolidation of results. This approach encapsulates the divide-and-conquer strategy: rather than one agent trying to do everything, each agent in the crew is simpler and focused, and ZBrain Builder coordinates them to complete the task. The end-to-end traceability is maintained since the entire crew operates under one orchestrated Flow (with logs of each agent’s contributions).
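As a rough illustration of the supervisor pattern, the sketch below fans an alert out to several context-gathering functions in parallel and collates their findings. The three child "agents" are hypothetical stand-ins returning canned data; in a real crew each would call an LLM or an external tool, and the platform, not hand-written code, would handle the orchestration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

Alert = Dict[str, str]
Finding = Dict[str, object]


# Hypothetical child agents; each returns canned context for the alert.
def siem_agent(alert: Alert) -> Finding:
    return {"source": "SIEM", "host": alert["host"], "related_events": 3}


def edr_agent(alert: Alert) -> Finding:
    return {"source": "EDR", "host": alert["host"], "suspicious_process": True}


def iam_agent(alert: Alert) -> Finding:
    return {"source": "IAM", "host": alert["host"], "recent_failed_logins": 0}


def run_triage_crew(
    alert: Alert,
    agents: Dict[str, Callable[[Alert], Finding]],
) -> Dict[str, object]:
    """Supervisor: run child agents concurrently, then collate findings."""
    with ThreadPoolExecutor(max_workers=max(1, len(agents))) as pool:
        futures = {name: pool.submit(fn, alert) for name, fn in agents.items()}
        findings = {name: fut.result() for name, fut in futures.items()}
    return {"alert_id": alert["id"], "findings": findings}
```

Keying the findings by agent name keeps each agent's contribution separately traceable in the collated result.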

Tool integrations

ZBrain Builder comes with a library of connectors that let agents invoke external tools and APIs securely. This is key for cybersecurity use cases, where the agent needs to interact with many systems (firewalls, ticketing systems, databases, threat intelligence APIs, etc.).

In ZBrain Builder, you can assign specific tools or integrations to specific agents in a crew. For example, you might give an “Intelligent Agent” access to a VirusTotal API integration, and a “Forensics Agent” access to a database connector for querying audit logs. Each agent thereby has its own toolbelt, and the platform manages authentication and permissions for those tool calls. Under the hood, when an agent decides to take an action like “get latest vulnerability scan results”, ZBrain Builder executes that through the integrated connector and returns the data to the agent. The platform’s secure connector framework ensures these calls are safe (using stored credentials, audit-logging the calls, etc.). In essence, ZBrain Builder makes tool usage a first-class capability for agents, which maps to the tool-use agent pattern discussed earlier. Security teams can plug in whatever internal systems they have (via API, database, etc.) so the agents can leverage them. This greatly extends what the AI can do – from simply analyzing text to actually interrogating systems and applying changes. And because it’s centrally managed, you maintain oversight on every external action an agent takes (all such interactions are logged with the MCP page).
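The idea of a per-agent toolbelt with centrally logged calls can be sketched as follows. The `ToolRegistry` class, the agent names, and the tool names are hypothetical and do not reflect ZBrain Builder's connector framework; the sketch only shows the general shape of granting tools to specific agents and recording every call attempt.

```python
from typing import Any, Callable, Dict, List, Set


class ToolRegistry:
    """Central broker: agents call tools only through this object."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}
        self._grants: Dict[str, Set[str]] = {}
        self.audit_log: List[dict] = []

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        """Make a tool available to be granted."""
        self._tools[name] = fn

    def grant(self, agent: str, tool: str) -> None:
        """Add a tool to one agent's toolbelt."""
        self._grants.setdefault(agent, set()).add(tool)

    def call(self, agent: str, tool: str, **kwargs: Any) -> Any:
        """Run a tool on behalf of an agent, logging the attempt first."""
        allowed = tool in self._grants.get(agent, set())
        self.audit_log.append({"agent": agent, "tool": tool, "allowed": allowed})
        if not allowed:
            raise PermissionError(f"{agent} may not call {tool}")
        return self._tools[tool](**kwargs)
```

Because denied attempts are logged before the exception is raised, the audit trail captures what an agent tried to do, not only what it succeeded in doing.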

Agentic orchestration framework and memory

ZBrain Builder supports advanced orchestration frameworks, including LangGraph, Google ADK, Microsoft Agent Framework and Microsoft Semantic Kernel, which enable stateful agent workflows. By using these frameworks, ZBrain Builder allows agents and multi-agent crews to maintain context (memory) across multiple steps and even across multiple agents. This means intermediate results or decisions can be passed along and recalled later in the flow.

For example, an agent in step 1 might fetch some user details, and a later agent in step 4 can access that information to make a decision – all handled via the platform’s context propagation. The stateful memory capability is crucial for reasoning loops (like ReAct): ZBrain Builder can retain the chain-of-thought or conversation state for an agent so that it doesn’t start from scratch each step. The platform’s adoption of LangGraph implies you can design complex reasoning patterns where, say, an agent can loop over a subgraph of actions while storing what it learned in each iteration.

For cybersecurity tasks, this is beneficial in scenarios such as incident investigation, where the agent must remember the clues it has collected as it goes through various tools. Additionally, because ZBrain Builder can integrate with knowledge bases (vector stores, graph databases, etc.) and allow agents to retrieve information on demand, the agents have an extended memory of enterprise knowledge. They can look up past incident reports or known threat signatures as part of their reasoning. In summary, ZBrain Builder’s LangGraph and memory features map to the patterns of stateful reasoning and long-term context, ensuring that agents operate with awareness of prior steps and relevant history – just as a human analyst would remember earlier findings during an investigation.
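Context propagation of this kind can be illustrated without any framework: each step receives the accumulated state and returns an updated copy, so a later step can read what an earlier one stored. The sketch below is plain Python, not the LangGraph API, and the step names, state keys, and risk rule are invented for the example.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Step = Callable[[State], State]


def fetch_user(state: State) -> State:
    # Hypothetical lookup; a real step would query a directory service.
    return {**state, "user": {"name": state["username"], "privileged": True}}


def check_login_location(state: State) -> State:
    # Illustrative rule: any login from outside the home country is unusual.
    unusual = state["login_country"] != state["home_country"]
    return {**state, "unusual_location": unusual}


def decide(state: State) -> State:
    # Uses values written by both earlier steps.
    risky = state["user"]["privileged"] and state["unusual_location"]
    return {**state, "decision": "escalate" if risky else "close"}


def run_flow(steps: List[Step], initial: State) -> State:
    """Thread one state dict through every step, recording the order."""
    state = dict(initial)
    history: List[str] = []
    for step in steps:
        state = step(state)
        history.append(step.__name__)
    state["history"] = history
    return state
```

The final step never re-fetches the user details; it relies on the state carried forward, which is the behavior the paragraph above describes for multi-step investigations.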

Observability and debugging

Recognizing the need for oversight, ZBrain Builder places heavy emphasis on observability of agentic workflows. The platform provides dashboards where you can watch agents think and act in real time, and logs every action and decision they make. For each run of a Flow or Agent Crew, you can trace the sequence of steps: which agent did what, what outputs were produced, and how the supervisor decided to proceed. This addresses the transparency challenge – instead of the agent being a black box, ZBrain Builder’s interface surfaces the chain-of-thought and the interactions.

From a security team perspective, this means during an incident you could replay what the AI did to validate it made the right calls. For debugging or audit, you have a record of each decision (e.g., “Agent X marked this alert as false positive because Y”). The platform also allows setting up alerts and monitors on the agent processes themselves. The observability extends to performance metrics too – token usage of the LLM, execution latency of each step, success rates, etc., are tracked. All of this gives security operations teams the confidence that they can trust but verify the AI: they have full visibility and can step in if something looks off. ZBrain Builder essentially builds the control tower for agentic AI, aligning with best practices of logging and monitoring as guardrails. This strong observability is a differentiator in enterprise settings where compliance and reliability are as important as raw AI capability.
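A minimal sketch of what such a per-run trace might contain is shown below: one record per step with the acting agent, a stated rationale, success or failure, and latency, plus a simple success-rate metric. The `RunTrace` and `StepRecord` names and fields are assumptions for illustration and are not ZBrain Builder's actual log schema.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class StepRecord:
    agent: str
    step: str
    rationale: str   # why the agent took this step
    ok: bool         # whether the step completed without error
    latency_s: float


@dataclass
class RunTrace:
    records: List[StepRecord] = field(default_factory=list)

    def run_step(self, agent: str, step: str, rationale: str,
                 fn: Callable[[], Any]) -> Any:
        """Execute fn, recording outcome and latency even if it fails."""
        start = time.perf_counter()
        try:
            result, ok = fn(), True
        except Exception:
            result, ok = None, False
        elapsed = time.perf_counter() - start
        self.records.append(StepRecord(agent, step, rationale, ok, elapsed))
        return result

    def success_rate(self) -> float:
        """Fraction of recorded steps that succeeded (0.0 if none ran)."""
        if not self.records:
            return 0.0
        return sum(r.ok for r in self.records) / len(self.records)
```

Replaying a run then amounts to reading `records` in order, and a falling `success_rate()` is the kind of signal on which an operator alert could be set.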

Policy enforcement and RBAC

ZBrain Builder includes built-in features to enforce security policies and role-based access control (RBAC) on AI agents. Each agent or connector can be scoped with permissions – for example, an agent might only be allowed to read from a database, but not write, or only allowed to call certain safe APIs. By enforcing least privilege on agents, ZBrain Builder ensures that even if an agent were to attempt an action outside its bounds, it technically cannot complete it. The platform also integrates with enterprise identity systems (SSO), so you can control which human users can deploy or trigger certain flows or agents, adding an extra layer of governance. In short, ZBrain Builder embeds governance into the fabric of agent workflows: security rules, approvals, and RBAC are not afterthoughts but integral options when constructing your agentic AI solutions. This ensures that the powerful autonomy of agents is always operating within the guardrails defined by the organization’s security leadership.

How LeewayHertz helps in solving cybersecurity use cases with ZBrain AI agents

LeewayHertz leverages its deep cybersecurity expertise and the ZBrain Builder platform to build specialized AI agents and solutions that automate, streamline, and strengthen security operations. These intelligent agents embed contextual analysis, real-time detection, and decision-making across the cybersecurity lifecycle—helping enterprises move from reactive to proactive defense models. Below is a summary of key cybersecurity use cases and how ZBrain agents enable faster, smarter, and scalable solutions.

Strategic cybersecurity & AI resilience advisory

LeewayHertz partners with enterprises to assess, strengthen, and future-proof their cybersecurity posture. Our consulting practice brings deep expertise in zero-trust architecture, cloud security, regulatory alignment, and AI governance. Whether modernizing infrastructure, improving compliance, or preparing for AI-driven security challenges, our advisory services deliver actionable strategies. Powered by ZBrain agents, we embed intelligent automation across cybersecurity assessments and remediation planning, enabling faster insights and long-term resilience.

| Use case | Description | How ZBrain agents can help |
|---|---|---|
| Security posture assessment | Evaluates current infrastructure, processes, and controls to identify gaps and improvement areas. | ZBrain agents can analyze configuration data and logs to summarize exposure risks and prioritize remediation actions. |
| Zero-trust architecture design | Helps organizations adopt least-privilege access and segmentation strategies for improved perimeter control. | ZBrain agents can simulate identity workflows, detect over-privileged roles, and validate policy enforcement. |
| AI governance readiness | Prepares enterprises to securely adopt GenAI and LLM technologies while meeting policy and regulatory needs. | ZBrain agents can assess model access risks, prompt injection exposure, and compliance gaps in AI systems. |
| Regulatory compliance planning | Aligns security programs with ISO 27001, SOC 2, HIPAA, GDPR and other frameworks. | ZBrain agents can monitor compliance coverage and generate audit-ready reports. |
| Third-party risk management | Identifies vulnerabilities across vendors and supply chain partners. | ZBrain agents can automate document reviews, evaluate risk signals, and surface anomalies for procurement teams. |

AI-augmented managed security & operations with ZBrain agents

LeewayHertz enhances enterprise security operations through AI-driven managed security services, integrating ZBrain agents to automate detection, response, and monitoring. By combining intelligent automation with real-time analytics, they deliver proactive threat management and operational resilience across IT, cloud, and network environments. Below is a summary of key security use cases, their descriptions, and how ZBrain agents augment each scenario.

| Use case | Description | How ZBrain agents can help |
|---|---|---|
| Managed detection & response | Detects threats in real time using AI-driven analytics. | ZBrain agents can automate threat correlation, anomaly detection, and alert triage, reducing false positives and accelerating response time. |
| SOC (Security Operations Center) | Monitors and manages incidents 24/7. | ZBrain agents can support log analysis, trigger-based alerting, and report generation to maintain ongoing SOC visibility. |
| NOC (Network Operations Center) | Monitors and detects network performance faults proactively. | ZBrain agents can continuously scan for latency patterns, suspicious traffic spikes, and predictive failure indicators. |
| Cloud & IT security operations | Monitors security posture. | ZBrain agents can identify misconfigurations, excessive permissions, and unencrypted data flows for remediation and compliance. |

AI-driven detection, monitoring & analytics with ZBrain agents

Modern cybersecurity demands rapid detection, intelligent analysis, and precise threat prioritization—often across vast volumes of telemetry. LeewayHertz enhances enterprise threat detection and monitoring strategies by embedding ZBrain agents into core analytics workflows. These AI agents can augment existing SIEM, UEBA, and threat intelligence systems with contextual reasoning, behavioral analysis, and automated correlation—enabling faster, more accurate threat detection and response.

| Use case | Description | How ZBrain agents can help |
|---|---|---|
| SIEM optimization | Enhances event correlation, alert triage, and threat prioritization through large-scale telemetry. | ZBrain agents can enrich SIEM pipelines with LLM-assisted correlation, summarization, and contextual alert scoring to surface high-priority threats faster. |
| AI-augmented threat intelligence | Enriches IOCs (indicators of compromise) with predictive scoring and context to anticipate and prioritize emerging threats. | ZBrain agents can continuously analyze threat feeds and match IOCs with current telemetry to generate actionable intelligence. |

Security automation with ZBrain agents

In high-velocity threat environments, automation is not just a productivity gain—it’s a necessity. LeewayHertz utilizes ZBrain agents to power advanced security automation, replacing manual SOC tasks with intelligent orchestration, guided investigation, and real-time decision support. These agents help security teams reduce operational fatigue, accelerate response, and standardize incident handling across tools and teams.

| Use case | Description | How ZBrain agents can help |
|---|---|---|
| SOAR playbook automation | Automates incident response tasks, alert correlation, and playbook execution across tools. | ZBrain agents can execute response actions, triage alerts, and coordinate remediation flows, reducing MTTR and human error. |
| SOC support agents | Streamlines incident workflows to reduce analyst burden and improve SOC efficiency. | ZBrain agents can handle triage, ticket creation, and post-incident reporting with consistent logic and speed. |
| Analyst copilots | Assists human analysts with contextual insights, summaries, and automated reporting. | ZBrain copilot agents can generate executive-ready summaries, recommend actions, and preserve investigation history for reuse. |

Proactive risk reduction with AI-powered vulnerability management

LeewayHertz leverages AI-driven tools and ZBrain agents to modernize vulnerability and exposure management. By automating discovery, prioritization, and remediation workflows, their solutions reduce manual load, shorten exposure windows, and enable continuous validation of defenses. From predictive threat scoring to autonomous testing and monitoring, they deliver a resilient, risk-aware security posture.

| Use case | Description | How ZBrain agents can help |
|---|---|---|
| Vulnerability prioritization | Identifies and focuses on exploitable risks with the highest business impact. | ZBrain agents can correlate scan results with threat intel to prioritize fixes and trigger patching workflows. |
|  | Simulates real-world attacks to validate the effectiveness and resilience of controls. | ZBrain agents can assist red teams by running AI-guided reconnaissance and attack pattern simulations. |

Strengthening digital perimeter visibility and third-party risk defense

LeewayHertz combines automated discovery with AI-powered analysis to safeguard enterprise boundaries and ecosystems. Their solutions help organizations maintain visibility into their evolving external footprint and assess third-party exposures with precision. Using ZBrain agents, LeewayHertz brings speed, context, and continuity to external risk monitoring and mitigation.

| Use case | Description | How ZBrain agents can help |
|---|---|---|
| Attack surface monitoring | Continuously identifies and classifies external-facing assets, domains, and services. | ZBrain agents can automate asset discovery, run DNS analysis, and alert on unknown or risky endpoints. |
| Third-party risk analysis | Assesses vulnerabilities across vendors and supply chain partners to reduce inherited risk. | ZBrain agents can evaluate supplier data, assess security ratings, and flag non-compliant or high-risk entities. |

Accelerating recovery through AI-powered incident handling

LeewayHertz helps enterprises move from detection to resolution faster with AI-enabled incident response and remediation strategies. By combining automation, simulation, and workflow orchestration, they empower security teams to minimize damage, eliminate vulnerabilities, and strengthen post-incident resilience. ZBrain agents support each stage with contextual insights and operational consistency.

| Use case | Description | How ZBrain agents can help |
|---|---|---|
| Incident response | Rapidly detects, contains, and investigates security incidents to reduce impact. | ZBrain agents can assist with alert triage, root cause analysis, and guided containment actions. |
| Resilience readiness | Simulates threats to test recovery and backup strategies before real-world breaches occur. | ZBrain agents can model breach scenarios and assess response plans for gaps and optimization. |

Strengthening cyber and AI posture through proactive risk assessments

LeewayHertz delivers advanced cyber and AI risk assessments to help enterprises quantify exposure, validate security controls, and prepare for evolving threats. Their methodology spans risk modeling, AI red teaming, and evaluations of secure LLM deployment. ZBrain agents support these processes by automating simulations, testing, and continuous monitoring—driving faster insights and safer AI integration.

| Use case | Description | How ZBrain agents can help |
|---|---|---|
| Cyber risk assessment | Uses AI-based models to quantify enterprise exposure and translate risks into actionable business KPIs. | ZBrain agents can automate risk scoring, generate dashboards, and flag high-impact vulnerabilities. |
| SecOps readiness evaluation | Assesses team maturity, tool alignment, and process efficiency for AI-integrated security operations. | ZBrain agents can benchmark operational workflows and suggest areas for automation and upskilling. |
| AI red team simulation | Simulates adversarial attacks on AI/LLM systems to uncover manipulation, fairness, and evasion vulnerabilities. | ZBrain agents can emulate prompt injections, test adversarial robustness, and generate exploitability reports. |
| Secure LLM assessments | Evaluates LLM systems for privacy, security, and compliance readiness from data pipelines to inference layers. | ZBrain agents can scan for leakage vectors, enforce prompt guardrails, and automate pipeline threat modeling. |

This agent-driven approach empowers security teams to scale efficiently, reduce operational overhead, and achieve real-time situational awareness. Whether it’s optimizing SOC workflows, improving detection precision, or securing LLM-based agent deployments, LeewayHertz’s AI-powered solutions align cybersecurity strategy with operational execution.

Conclusion

Agentic AI represents a profound shift in cybersecurity – from static defenses and manual operations towards adaptive, autonomous, and intelligent security workflows. By enabling AI to not only inform but also act, organizations can achieve a level of speed and proactivity that was previously impossible. Threats that propagate fast can be countered instantly. Massive datasets that overwhelm human analysts can be continuously monitored by tireless AI sentinels. Moreover, these agents learn and improve, offering a path to keep pace with attackers who are also leveraging automation and AI. The promise of agentic AI in cybersecurity is a stronger security posture: one where responses are faster, coverage is broader, and humans are focused on strategy and oversight rather than triage drudgery.

That said, realizing this promise requires innovation with governance. The strategic edge will go to those who harness agentic AI through robust platforms that provide control and transparency. This is where platforms like ZBrain Builder play a pivotal role. They allow enterprises to deploy autonomous agents confidently by providing the necessary scaffolding – from orchestration and memory to policy enforcement and auditing – all in one place. Instead of coding a custom AI agent from scratch and risking uncontrolled behavior, security teams can leverage such platforms to gain pre-built best practices, safe AI integration, built-in guardrails, and enterprise-grade monitoring. This dramatically shortens the adoption curve for agentic AI.

In closing, agentic AI in cybersecurity is more than a buzzword – it’s the next evolution in how we defend our digital assets, combining the tireless precision of machines with reasoning capabilities approaching human expertise. Early adopters are already seeing benefits in breach prevention and operational efficiency. As cyber threats and AI technologies continue to advance, embracing agentic AI will become not just an advantage but a necessity. Organizations equipped with platforms like ZBrain Builder will be positioned to transform their SOCs by operating intelligent agent swarms at scale. The future of cybersecurity will be built on human-AI collaboration, where autonomous agents handle execution at machine speed while humans provide strategic direction and oversight.

Want to unlock real-time defense, automated threat handling, and adaptive SecOps workflows? Explore how ZBrain Builder brings agentic AI to life in your cybersecurity stack. Book a demo!

FAQs

What is agentic AI, and how does it apply to cybersecurity?

Agentic AI refers to systems composed of autonomous, goal-directed agents that can reason, decide, and act within specific environments. In cybersecurity, AI agents enable automated monitoring, real-time threat detection, incident triage, and adaptive response workflows. Unlike static rule-based systems, agentic AI can continuously learn from evolving threat vectors, prioritize alerts based on impact, and orchestrate dynamic defense mechanisms across endpoints, networks, and applications.

Why is agentic AI more effective than traditional automation for SecOps?

Traditional automation follows predefined workflows, lacking contextual awareness or adaptability. Agentic AI, by contrast, incorporates reasoning capabilities and tool orchestration, allowing it to adjust to new threats, assess intent, and take nuanced actions. This is crucial in SecOps, where the speed and complexity of attacks require decisions beyond fixed scripts, such as correlating logs, invoking external tools, and escalating incidents only when confidence thresholds are met.
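
The idea of escalating only when a confidence threshold is met can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular product: the `Finding` type, the `decide` function, and the 0.8 threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass

# Hypothetical threshold; a real deployment would tune this per alert type.
ESCALATION_THRESHOLD = 0.8


@dataclass
class Finding:
    alert_id: str
    summary: str
    confidence: float  # agent's estimated probability that the alert is malicious


def decide(finding: Finding, threshold: float = ESCALATION_THRESHOLD) -> str:
    """Return 'escalate' when confidence meets the threshold, else 'monitor'."""
    if not 0.0 <= finding.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "escalate" if finding.confidence >= threshold else "monitor"


# Illustrative findings with made-up confidence scores.
findings = [
    Finding("A-1", "Repeated failed logins followed by a success", 0.91),
    Finding("A-2", "Single port scan from a known scanner", 0.35),
]
decisions = {f.alert_id: decide(f) for f in findings}
print(decisions)
```

In practice the confidence value would come from the agent's own correlation of logs and tool results; the point of the sketch is only that the escalation rule itself stays simple and auditable.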

How does agentic AI contribute to DevSecOps pipelines?

In DevSecOps, AI agents can proactively scan code repositories, monitor CI/CD pipelines, and validate policy compliance in real time. Agents can automatically trigger remediation workflows, alert relevant developers, or gate deployments when vulnerabilities are detected. This intelligent integration minimizes manual overhead, reduces mean time to resolution (MTTR), and embeds security seamlessly into the software development lifecycle.
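
As a rough sketch of deployment gating, the Python below blocks a release when any finding reaches a chosen severity. The `Vulnerability` type, the `gate_deployment` function, the severity scale, and the `EXAMPLE-…` identifiers are assumptions made for illustration; a real pipeline would take findings from its own scanner output.

```python
from dataclasses import dataclass

# Assumed four-level severity scale, ordered from least to most severe.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Vulnerability:
    identifier: str  # placeholder ID, not a real CVE
    severity: str    # one of the keys in SEVERITY_ORDER


def gate_deployment(vulns, block_at="high"):
    """Return (allowed, blocking): blocking lists findings at or above block_at."""
    limit = SEVERITY_ORDER[block_at]
    blocking = [v for v in vulns if SEVERITY_ORDER[v.severity] >= limit]
    return (not blocking, blocking)


# Illustrative scan result with made-up findings.
scan = [
    Vulnerability("EXAMPLE-001", "medium"),
    Vulnerability("EXAMPLE-002", "critical"),
]
allowed, blocking = gate_deployment(scan)
print("deploy allowed:", allowed)
print("blocking findings:", [v.identifier for v in blocking])
```

Returning the blocking findings alongside the decision lets the same step feed a remediation workflow or a developer notification.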

What types of agents are used in agentic cybersecurity architectures?

Typical agent types include:

- Threat detection agents – analyze network or endpoint behavior to flag anomalies.

- Vulnerability management agents – monitor assets for known CVEs and misconfigurations.

- Incident response agents – orchestrate investigation steps and containment.

- Compliance validation agents – enforce regulatory checks (e.g., HIPAA, PCI-DSS).

- Knowledge agents – retrieve documentation or past incident data to assist SOC analysts.

How does LeewayHertz use agentic AI to solve cybersecurity challenges?

LeewayHertz leverages its ZBrain Builder platform to build intelligent AI agents that automate and optimize key cybersecurity operations. These agents assist with threat detection, alert triage, incident response, vulnerability prioritization, compliance reporting, and more. By embedding LLM-powered reasoning into security workflows, LeewayHertz helps organizations manage complex, high-velocity security environments, streamlining workflows and enabling more informed decision-making.

How does ZBrain Builder support agentic AI for cybersecurity?

ZBrain Builder provides a low-code environment to create cybersecurity agents that can monitor logs, query threat intelligence databases, validate access patterns, or orchestrate response playbooks. With Flow-based orchestration and modular tool integration, ZBrain Builder enables parallel, multi-agent workflows capable of multi-hop reasoning, which is crucial for complex incident analysis. Agents can use connectors to interact with SIEM, IAM, and EDR systems, supporting contextual, secure decision-making.

Can ZBrain agents be integrated into existing security tools?

Yes. ZBrain agents can be connected to enterprise SIEMs (e.g., Splunk, Microsoft Sentinel), ticketing systems (e.g., ServiceNow, Jira), vulnerability scanners, and identity platforms through API connectors. This allows ZBrain’s AI agents to enhance and extend current security infrastructure without replacing it.

What role do explainability and auditability play in agentic AI for cybersecurity?

In regulated environments, every action taken by an AI agent must be explainable and traceable. ZBrain Builder logs all prompts, outputs, and tool calls. Human-in-the-loop checkpoints can be added at decision nodes to ensure policy alignment. These capabilities satisfy compliance needs and improve trust in autonomous decision-making.
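
To make the pattern concrete, here is a minimal Python sketch of an agent step that records its prompt, output, human review, and tool call in an audit trail. It does not use or describe ZBrain Builder's actual API: `AuditLog`, `run_step`, and the lambda stubs standing in for the model, the analyst, and the connector are all hypothetical.

```python
import json
from datetime import datetime, timezone


class AuditLog:
    """In-memory audit trail; a real system would write to durable storage."""

    def __init__(self):
        self.records = []

    def record(self, kind, payload):
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "kind": kind,
            "payload": payload,
        }
        self.records.append(entry)
        return entry

    def to_jsonl(self):
        # One JSON object per line, convenient for log shipping.
        return "\n".join(json.dumps(r, sort_keys=True) for r in self.records)


def run_step(log, prompt, model, tool, needs_approval, approver):
    """Run one agent step, logging each stage and pausing for human review."""
    log.record("prompt", prompt)
    action = model(prompt)
    log.record("output", action)
    if needs_approval(action):
        approved = approver(action)
        log.record("human_review", {"action": action, "approved": approved})
        if not approved:
            return None  # rejected actions are never executed
    result = tool(action)
    log.record("tool_call", {"action": action, "result": result})
    return result


log = AuditLog()
result = run_step(
    log,
    prompt="Host web-01 shows beaconing; propose an action.",
    model=lambda p: "isolate_host:web-01",         # stub standing in for an LLM call
    tool=lambda action: f"executed {action}",      # stub standing in for a connector
    needs_approval=lambda action: action.startswith("isolate_host"),
    approver=lambda action: True,                  # stub for an analyst's decision
)
print(log.to_jsonl())
```

Because a rejected action returns before the tool is invoked, the trail shows both what the agent proposed and that nothing was executed.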

Can agentic AI scale to support large enterprise cybersecurity operations?

Absolutely. Agentic AI scales horizontally by distributing responsibilities across specialized agents. ZBrain’s orchestration layer ensures these agents can run concurrently, adapt to workload demands, and support enterprise-wide observability. This distributed design is particularly effective in managing the volume and velocity of data in modern security operations centers (SOCs).
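
The concurrency idea can be illustrated with Python's standard `asyncio` library. This is only a sketch under simplifying assumptions: the two agent functions, their checks, and the event fields are hypothetical, and `asyncio.sleep(0)` stands in for real I/O such as a log query or an asset lookup.

```python
import asyncio


async def detection_agent(event):
    await asyncio.sleep(0)  # placeholder for an I/O-bound log query
    return {"agent": "detection", "suspicious": "failed_login" in event["tags"]}


async def vulnerability_agent(event):
    await asyncio.sleep(0)  # placeholder for an asset inventory lookup
    exposed_hosts = {"web-01"}  # made-up inventory for the example
    return {"agent": "vulnerability", "exposed": event["host"] in exposed_hosts}


async def analyze(event):
    # Run both specialized agents concurrently; results keep argument order.
    return await asyncio.gather(detection_agent(event), vulnerability_agent(event))


results = asyncio.run(analyze({"host": "web-01", "tags": ["failed_login"]}))
print(results)
```

Adding another specialized agent means adding one more coroutine to the `gather` call, which is the horizontal scaling pattern in miniature.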

How can an organization partner with LeewayHertz to implement agentic AI in cybersecurity?

To explore how LeewayHertz can support your cybersecurity initiatives with agentic AI, contact us at sales@leewayhertz.com. Our team will start with a consultation to identify organizational gaps and find where AI can add value. We will then guide you through our security-focused solutions and discuss how intelligent AI agents can help address your organization’s unique risk posture, regulatory mandates, and operational challenges. Please include your organization’s name, industry, and key cybersecurity priorities to help us tailor the conversation. We look forward to building scalable, AI-driven systems that strengthen resilience and streamline security operations.
