How to use LLMs in synthesizing training data?


In the dynamic realm of machine learning and artificial intelligence, there is an unending quest for rich, diverse, and unbiased data. However, data, as indispensable as it is, often comes with its share of pitfalls: scarcity, privacy concerns, and biases, to name a few. Now imagine a world where data is abundant, unbiased, and unencumbered by privacy issues. Welcome to the world of synthetic data, a game-changing innovation that is reshaping the data science landscape.

Large Language Models (LLMs) can understand, generate, and even refine human-like text. Harnessing them, we can generate high-quality synthetic training data and train our models more efficiently. This article explains how you can use LLMs to synthesize training data as a practical answer to real-world data challenges. Through this guide, we aim to give you a solid understanding of LLMs and explain the benefits of synthetic data. Most importantly, we show you how to use LLMs to synthesize your own training data.

- What are LLMs?

- What is training data in ML and its importance?

- What is synthetic data?

- Synthetic data use cases

- Benefits of synthesizing training data

- Step-by-step guide on using LLMs for synthesizing training data

- How to evaluate the quality of synthesized training data?

What are LLMs?

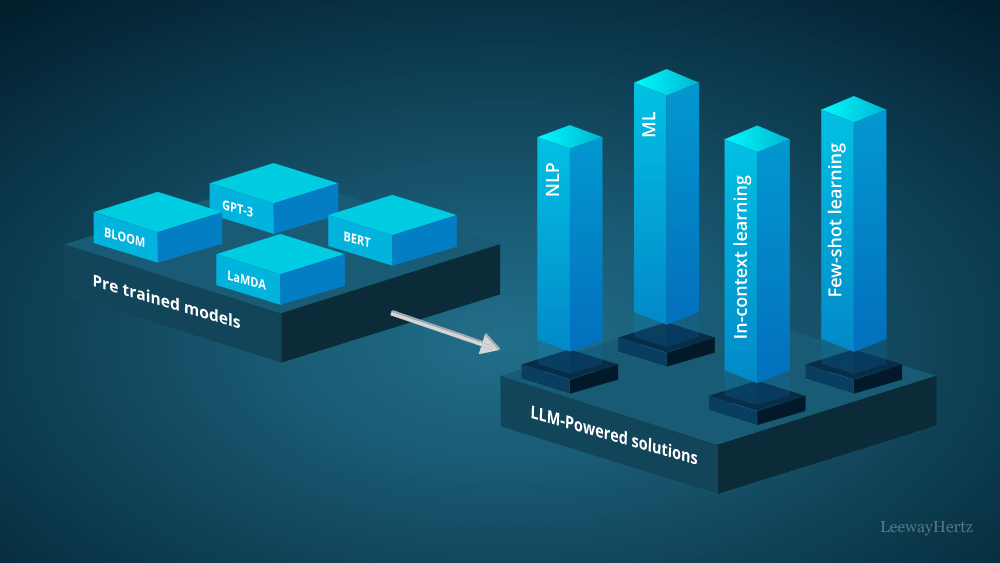

Before we discuss synthetic data, it is important to understand what LLMs are. Large Language Models (LLMs) are sophisticated artificial intelligence models that learn to generate text mimicking human language. They are trained on extensive volumes of text data (books, articles, web pages, and more), which enables them to learn the structure and patterns of language. Built on deep learning, they can perform an array of complex language tasks with high-quality results.

Popular LLMs such as Google’s BERT, Facebook’s RoBERTa, and OpenAI’s GPT series have been applied to tasks ranging from text classification and question answering to language translation and content creation. This range shows their versatility and effectiveness.

Talking about their applications, LLMs boast an impressive range:

- Language translation: LLMs can translate text from one language to another fluently and accurately.

- Chatbots and conversational AI: They form the backbone of advanced chatbots and conversational AI systems, enabling fluent conversations with users.

- Content creation: Be it articles, summaries, or product descriptions, LLMs can generate contextually relevant and grammatically precise content.

- Text summarization: LLMs have the capacity to condense vast text content into shorter, more manageable summaries.

- Question answering: These models excel in identifying pertinent information from large text bodies to answer questions.

- Sentiment analysis: LLMs can decipher the underlying sentiment in a text, helping companies comprehend customer sentiment towards their offerings.

- Speech recognition: LLMs enhance speech recognition systems by understanding the context and meaning of spoken words more accurately.

LLMs also strengthen broader natural language processing applications. They enhance the accuracy of search engines, improve customer service, and automate content creation. They also help personalize user experiences and make digital content more accessible.

The hype around LLMs is justified, given their versatile applications. They have redefined chatbot technology by simplifying chatbot creation and maintenance, and their ability to generate varied and unexpected text is noteworthy. LLMs can also be fine-tuned to perform specific NLP tasks, making it possible to build NLP models more cost-effectively and efficiently. Because a single pretrained model can be adapted to so many tasks, LLMs are often described as ‘foundation models.’ However, these models come with their own set of challenges. They require extensive computational resources, specialized hardware, and vast quantities of training data, making their development and maintenance costly.

What is training data in ML and its importance?

Training data is the lifeblood of machine learning (ML) systems. It is the foundation from which these systems learn patterns and, eventually, make predictions. Without it, the core tasks of ML development could not be carried out.

At the heart of every successful AI and ML project lies quality training data. It is what enables a machine to learn human-like behavior and predict outcomes accurately. The role of training data in machine learning cannot be overstated; it largely determines the performance and accuracy of the AI model. Understanding the value of a robust training dataset is therefore pivotal to acquiring the right quantity and quality of data for your models, because higher-quality training data generally produces more accurate models. As a practitioner, you should also recognize that the availability and characteristics of your training data influence which algorithm you can select.

Prioritizing training data in any AI or ML project is not just good practice but a necessity. Investing in high-quality datasets generally leads to better outcomes. To illustrate, consider a model being trained to recognize images of cats. Its ability to identify a cat in a new image depends directly on the variety, quality, and quantity of the cat images it was trained on. Understanding the significance of training data, ensuring its quality, and choosing the right quantity form the basis of successful AI and ML projects. Always remember: the efficacy of your models is inextricably tied to the quality of your training data.

Here are some of the areas where training data plays a vital role. The quality and success of these applications and processes are directly related to the quality and quantity of the training data.

- Object recognition and categorization

Training data serves a critical role in supervised machine learning, particularly in the recognition and categorization of objects. Consider a scenario where an algorithm must distinguish between images of cats and dogs. In this case, labeled images of both species are required. The algorithm learns to discern the distinctive features between the two species based on this training data, enabling it to recognize and categorize similar objects in the future. If the training data is inaccurate or poor in quality, it could lead to inaccurate results, potentially derailing the success of an AI project.

- Crucial input for machine learning algorithms

Training data is indispensable to the operation of machine learning algorithms. It’s the primary input that provides the algorithm with the information necessary to make decisions akin to human intelligence. In supervised machine learning, the algorithm requires labeled training data as an additional input. If the training data is not appropriately labeled, it diminishes its value for supervised learning. For instance, images must be annotated with precise metadata to be recognizable to machines through computer vision. Therefore, accuracy in labeling the training data is of paramount importance.

- Machine learning model validation

Developing an AI model is only part of the process; it is equally vital to validate the model to assess its accuracy and ensure it performs well in real-life scenarios. Validation and test data are labeled examples held out from training and used to measure the model’s performance on data it has not seen. This data helps verify the model’s ability to make accurate predictions, establishing its reliability before deployment. Hence, the importance of labeled data extends beyond learning; it also plays a key role in ensuring the overall quality and accuracy of the AI model.

Launch your project with LeewayHertz!

Whether you want synthetic data or creative content such as text or images, a versatile LLM can fulfill your needs. Choose our LLM development services for a custom model perfectly suited to your use cases.

What is synthetic data?

Synthetic data is data that is not collected from the real world but is created artificially using computer programs or simulations. Think of an artist painting a replica of a real painting. In the same way, these programs reproduce the patterns found in real data without containing any of the actual records.

It’s used often in fields like artificial intelligence and machine learning because it helps overcome certain issues associated with real data. For example, real data can be biased, incomplete, or not diverse enough.

Synthetic data offers a tailored environment for training and improving AI and machine learning algorithms. It serves as a simulated practice area where algorithms can learn, adapt, and develop their capabilities before being deployed in real-world scenarios. Although synthetic data is generated to mimic real data, it can be controlled and manipulated to create specific training and test scenarios. This makes it a valuable tool for refining AI and machine learning models. Because it is generated on demand, you can create as much data as you need and customize it to your requirements. This is especially helpful when real-world data is hard to come by.

Additionally, synthetic data is a strong choice when privacy matters. It can be created to resemble real data without including any personal information, such as someone’s name or address. AI researchers can therefore train their models while greatly reducing the risk to anyone’s privacy, provided the generation process itself does not leak the original records.

Synthetic data use cases

Synthetic data is transforming machine learning across a range of fields, including computer vision, natural language processing, and beyond. Its applications are vast and diverse:

Facilitating data sharing and collaboration

In sectors where data sharing is essential but hampered by privacy issues, synthetic data acts as a secure alternative. It replicates the statistical properties of real data, enabling organizations to collaborate and share insights without compromising sensitive information. This approach is crucial in research projects or industries like healthcare and finance where data privacy is paramount.

Computer vision applications

Synthetic data plays a pivotal role in training computer vision models. Tasks such as object detection, image segmentation, and facial recognition often require vast and varied datasets. Generating synthetic images with different orientations, positions, and lighting conditions can create comprehensive datasets for training, bypassing the time and cost constraints of collecting real-world data.

Natural Language Processing (NLP)

In NLP, synthetic data aids in balancing datasets and mitigating bias. For tasks like text classification and sentiment analysis, it can generate examples of underrepresented classes, enhancing the model’s ability to process diverse text inputs. This is particularly useful in scenarios where real-world data is skewed towards certain sentiments or topics.
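To make the balancing idea concrete, here is a minimal sketch. It assumes you have a list of class labels and will ask an LLM for extra examples of the minority classes. It computes how many examples each minority class is missing relative to the largest class, then splits that shortfall into prompts small enough for a single response. The function names, batch size, and prompt wording are all hypothetical.

```python
from collections import Counter


def balancing_plan(labels):
    """Return how many extra examples each minority class needs to match the largest class."""
    counts = Counter(labels)
    target = max(counts.values())
    return {label: target - n for label, n in counts.items() if n < target}


def build_prompts(plan, examples_per_prompt=10):
    """Split each class's shortfall into prompts small enough for one LLM response."""
    prompts = []
    for label, missing in sorted(plan.items()):
        while missing > 0:
            batch = min(examples_per_prompt, missing)
            # Illustrative prompt wording only; tune it for your model and domain.
            prompts.append(
                f"Write {batch} short, varied customer reviews of a coffee shop "
                f"that express {label} sentiment. Return one review per line."
            )
            missing -= batch
    return prompts


# Hypothetical label counts from a skewed sentiment dataset.
labels = ["positive"] * 50 + ["negative"] * 12 + ["neutral"] * 30
plan = balancing_plan(labels)
prompts = build_prompts(plan)
print(plan)
print(len(prompts), "prompts; first one:", prompts[0])
```

Generated examples should still be deduplicated and spot-checked before they are added to the training set.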

Reinforcement learning

Synthetic data creates diverse environments for reinforcement learning, where agents learn to maximize rewards through interactions. Simulated environments let agents gather large amounts of experience that would be slow, costly, or risky to collect in the real world.

Healthcare applications

Accessing medical data for research is often restricted due to privacy regulations. Synthetic data provides a solution by simulating patient data, enabling researchers to develop machine learning models for disease prediction and treatment personalization without compromising patient privacy.

Fraud detection in finance

Detecting financial fraud relies on identifying rare events, for which real-world examples might be insufficient. Synthetic data can generate realistic examples of fraudulent transactions, enhancing the model’s ability to recognize such patterns.
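The article does not prescribe a generation technique for rare events. One classic, non-LLM approach is SMOTE-style interpolation between existing minority examples. The sketch below implements that core idea with the standard library only; the function name, the feature choice (amount, hour of day), and the data are hypothetical.

```python
import math
import random


def smote_like(minority, n_new, k=3, seed=0):
    """Generate n_new synthetic points by interpolating between minority-class neighbours.

    Core idea of SMOTE: pick a real minority point, pick one of its k nearest
    minority neighbours, and place a new point at a random position on the
    line segment between them.
    """
    if len(minority) < 2:
        raise ValueError("need at least two minority examples")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic


# Hypothetical fraudulent transactions as (amount in USD, hour of day).
fraud = [(950.0, 2.0), (1200.0, 3.0), (1100.0, 1.0), (1010.0, 4.0)]
for point in smote_like(fraud, n_new=3):
    print(point)
```

In practice, features are usually scaled before computing distances, so that amounts in dollars do not dominate hours.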

Anomaly detection

Widely used in network security and industrial settings, anomaly detection involves identifying unusual patterns. Given the rarity of anomalies, synthetic data can supplement real data to provide a robust training set, improving the detection capabilities of models.

Retail and e-commerce

Synthetic data helps simulate customer behaviors, aiding businesses in forecasting trends, optimizing pricing strategies, and managing inventory effectively.

Gaming

In gaming, synthetic data trains AI agents by generating numerous game scenarios. Compared with relying solely on real gameplay data, this approach speeds up learning and makes agents more effective.

Cybersecurity

Machine learning models in cybersecurity benefit from synthetic data in creating realistic network traffic scenarios, including both normal and malicious activities, for better training against evolving cyber threats.

Drug discovery

The pharmaceutical industry uses synthetic data to help predict the effectiveness of new drugs, supplementing limited clinical trial data and accelerating drug development.

Each of these applications demonstrates the versatility and value of synthetic data in enhancing machine learning models across diverse industries.

Benefits of synthesizing training data

Synthesized data can be used to augment existing datasets with synthetic records, improving the training of various models and algorithms. In essence, it acts as an artificial data pool for verifying mathematical models or training machine learning models.

Synthesized data is used across sectors to remove sensitive elements from the original data. Some datasets contain confidential information that cannot be shared publicly because of privacy concerns. Synthetic data addresses this by producing artificial data that mirrors the original but is designed not to retain any personally identifiable information. This avoids the privacy issues of using real consumer data without consent or compensation. It also makes it possible to develop and test algorithms and models without violating confidentiality.

The use of synthesized training data offers numerous benefits, rendering it an invaluable resource for organizations:

- Cost-efficiency: Generating synthetic data sidesteps much of the cost of collecting, curating, and storing real-world data. Because generation is computer-driven, it greatly reduces the need for extensive human labor, expensive collection methods, and storage facilities. It also typically costs far less per data point than traditional methods.

- Data privacy and security: Synthetic data is particularly valuable where using real data raises privacy concerns or legal issues, such as in healthcare or finance. Well-generated synthetic records do not correspond to real individuals. They can therefore make it easier to comply with privacy regulations like GDPR or HIPAA and greatly reduce the risk of exposing sensitive personal information. Compliance is not automatic, however: if the generator was trained on real records, its output should still be checked for leakage.

- Scalability: The ability to produce large quantities of synthetic data on demand offers a scalable solution for testing and training AI models. This is especially useful in scenarios requiring extensive data sets for accurate modeling, such as in complex simulations or when real-world data is scarce or difficult to obtain.

- Diversity of data: Synthetic data generation tools can create varied datasets that reflect different scenarios and conditions, enhancing the robustness of AI models. This diversity ensures that models are not just trained on a narrow slice of potential realities but are exposed to a wide array of possibilities, making them more adaptable and reliable.

- Reduction of bias: Real-world data often contains inherent biases, which can skew AI and ML models. By carefully designing synthetic data, these biases can be minimized, leading to more accurate and fair AI systems. This is crucial for ensuring that AI models perform equitably across different demographic groups and scenarios.

In summary, synthetic data offers a pragmatic and efficient approach for businesses to improve their AI and ML systems, ensuring cost-effectiveness, privacy compliance, scalability, diversity, and reduced bias.


Step-by-step guide on using LLMs for synthesizing training data

In this example, we will create synthetic sales data for training a sales prediction model. Imagine we have a new sales prediction app for coffee shops that we want to test using this synthetic data. The following steps explain how an LLM can be used to synthesize this data for the model’s training:

Step 1: Choosing the right LLM for your specific application

Selecting the right Large Language Model (LLM) for synthesizing training data requires careful consideration of a few factors:

- Task requirements: The type of task you want to accomplish greatly influences your choice of LLM. For example, if your task is related to text generation, a sequence-to-sequence model might be the best fit. On the other hand, for classification tasks, a simpler model might suffice.

- Data availability: The amount and quality of data you have at your disposal can influence the complexity of the LLM that you choose. More complex models may require more data for training.

- Computational resources: More sophisticated LLMs require more computational power and memory for training and inference. You need to consider your available resources when choosing a model.

- Privacy concerns: If your data includes sensitive information, you may prefer a model you can host yourself, so that the data is not sent to a third-party service.

- Accuracy vs. explainability: Some LLMs can offer high accuracy but have low explainability. If your project requires understanding the reasoning behind the model’s predictions, you might need to choose a simpler, more interpretable model.

- Model training time: Training a complex LLM can take a significant amount of time. Depending on the time constraints of your project, you might need to opt for a less complex model that can be trained more quickly.

Finally, it’s a good practice to experiment with a few different models and compare their performance on your specific task. This empirical evaluation can help you find the most suitable LLM for your use case.

Various AI tools can create synthetic data for testing apps, building data analysis pipelines, and developing machine learning models. ChatGPT, which is built on OpenAI’s GPT models, is one powerful option. We could begin by asking ChatGPT to produce some data for us with the following prompt:

Create a CSV file with 25 random sales records for a coffee shop. Each record should include the following fields:

- id (incrementing integer starting at 1)
- date (random date between 1/1/2022 and 12/31/2022)
- time (random time between 6:00am and 9:00pm in 1-minute increments)
- product_id (incrementing integer starting at 1)
- product
- calories
- price in USD
- type (drink or food)
- quantity (random integer between 1 and 3)
- amount (price * quantity)
- payment type (cash, credit, debit, or gift card)

The way you phrase your query to ChatGPT can significantly alter its output. For the query above, we can expect plausible-looking records, but they won’t be very helpful for testing our app because they are not delivered in a usable format. ChatGPT returns the records as text in the chat rather than as an actual CSV file. It can also return only a limited amount of data, because the length of each response is capped at a fixed number of tokens. For example, the original GPT-3 models had a context window of 2,048 tokens.
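If you do ask a chat model for records directly, its reply is text. That text is often wrapped in a Markdown code fence and surrounded by prose, and a reply cut off by the length limit may end with an incomplete row. Below is a minimal standard-library sketch that extracts the CSV and drops incomplete rows. It assumes the reply contains a header row matching the columns you requested; the function name and column list are hypothetical.

```python
import csv
import io

FENCE = "`" * 3  # three backticks, the Markdown code-fence marker


def parse_llm_csv(reply, expected_columns):
    """Extract complete CSV records from a chat-style LLM reply."""
    lines = reply.strip().splitlines()
    # Keep only the text inside the first Markdown code fence, if there is one.
    fences = [i for i, line in enumerate(lines) if line.strip().startswith(FENCE)]
    if len(fences) >= 2:
        lines = lines[fences[0] + 1:fences[1]]
    reader = csv.DictReader(io.StringIO("\n".join(lines)))
    if reader.fieldnames != expected_columns:
        raise ValueError(f"unexpected header: {reader.fieldnames}")
    complete = []
    for row in reader:
        # Short (truncated) rows get None values; rows with extra fields get a None key.
        if None in row or None in row.values():
            continue
        complete.append(row)
    return complete


# A hypothetical reply whose last row was cut off by the response-length limit.
reply = "\n".join([
    "Sure! Here are the records:",
    FENCE + "csv",
    "id,date,product",
    "1,03/14/2022,Latte",
    "2,07/02/2022,Muffin",
    "3,11/30",
    FENCE,
])
rows = parse_llm_csv(reply, ["id", "date", "product"])
print(len(rows), "complete rows")
```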

Instead of having ChatGPT create the synthetic data directly, we could ask it to write a Python script that generates the synthetic sales data we need. This approach scales better, because the script can be rerun to produce as many records as required. So, let’s instruct ChatGPT to write a Python program that creates synthetic sales data similar to our previous requirements.

Write a Python3 code to make a CSV file with 100 sales records for typical coffee shop items. The CSV file should have a header row and each record should have these details:

- id (a series of numbers starting from 1)
- date (any random date between 1/1/2022 and 12/31/2022)
- time (any random time from 6:00am to 9:00pm, changing every minute)
- product_id (a series of numbers starting from 1)
- product
- calories
- price (in USD)
- type (either a drink or food)
- quantity (a random number between 1 and 3)
- amount (price times quantity)
- payment type (could be cash, credit, debit, or gift card)

The generated code is as follows:

```python
import csv
import random
from datetime import datetime, timedelta

# List of possible products and their corresponding details
products = [
    {"product_id": 1, "product": "Espresso", "calories": 10, "price": 2.0, "type": "drink"},
    {"product_id": 2, "product": "Cappuccino", "calories": 120, "price": 3.5, "type": "drink"},
    {"product_id": 3, "product": "Croissant", "calories": 300, "price": 2.5, "type": "food"},
    # add more products as needed...
]

payment_types = ["cash", "credit", "debit", "gift card"]

start_date = datetime.strptime("1/1/2022", "%m/%d/%Y")
end_date = datetime.strptime("12/31/2022", "%m/%d/%Y")


def random_date(start, end):
    # Pick a random moment between start and end (only the date part is written below)
    return start + timedelta(seconds=random.randint(0, int((end - start).total_seconds())))


def random_time():
    # Random number of minutes after 6:00, up to 15 hours later (9:00pm inclusive)
    minutes = random.randint(0, 15 * 60)
    return "{:02d}:{:02d}".format(6 + minutes // 60, minutes % 60)


with open("sales_data.csv", "w", newline="") as file:
    writer = csv.writer(file)
    writer.writerow(["id", "date", "time", "product_id", "product", "calories",
                     "price", "type", "quantity", "amount", "payment_type"])

    for i in range(1, 101):
        product = random.choice(products)
        quantity = random.randrange(1, 4)  # 1, 2 or 3
        writer.writerow([
            i,
            random_date(start_date, end_date).strftime("%m/%d/%Y"),
            random_time(),
            product["product_id"],
            product["product"],
            product["calories"],
            product["price"],
            product["type"],
            quantity,
            round(quantity * product["price"], 2),  # round to cents to avoid float artifacts
            random.choice(payment_types),
        ])
```

ChatGPT was able to generate a full Python program to make synthetic sales data with just one short prompt. However, because of ChatGPT’s response size limit, we could only list six items on the coffee shop menu. If we asked for more items, the output and the program would be cut off, which means the program wouldn’t run.

To work around this limit, you can provide additional prompts such as the following:

```python
# Additional prompts:
# Create a function to return one random item from a list of dictionaries
# containing 15 common drink items sold in a coffee shop including the id, name, calories, price, and type of 'drink'.
# Capitalize the first letter of each product name. Start product id at 1.
# Create a function to return one random item from a list of dictionaries
# containing 10 common food items sold in a coffee shop including the id, name, calories, price, and type of 'food'.
# Capitalize the first letter of each product name. Start product id at 16.
```

To install the Faker library that the generated code depends on, run:

```shell
pip install faker
```

The complete generated code looks like this:

```python
import csv
import random
from datetime import datetime, timedelta

from faker import Faker

fake = Faker()


# Function to generate a random datetime between start and end
def random_time(start, end):
    return start + timedelta(seconds=random.randint(0, int((end - start).total_seconds())))


# Build the menus once so each product id always maps to the same name, calories, and price.
# Note: Faker supplies placeholder (lorem ipsum) words, not real coffee shop item names.
drinks = [{"id": i + 1, "name": fake.unique.word().capitalize(),
           "calories": random.randint(50, 200), "price": round(random.uniform(1, 5), 2),
           "type": "drink"} for i in range(15)]
foods = [{"id": i + 16, "name": fake.unique.word().capitalize(),
          "calories": random.randint(200, 500), "price": round(random.uniform(3, 10), 2),
          "type": "food"} for i in range(10)]


# Return one random drink item
def generate_drink_items():
    return random.choice(drinks)


# Return one random food item
def generate_food_items():
    return random.choice(foods)


# Return one random payment type
def generate_payment_types():
    return random.choice(["cash", "credit", "debit", "gift card"])


opening = datetime.strptime("6:00 AM", "%I:%M %p")
closing = datetime.strptime("9:00 PM", "%I:%M %p")

# Open the CSV file
with open("coffee_shop_sales_chatgpt_data.csv", "w", newline="") as file:
    writer = csv.writer(file)
    writer.writerow(["id", "date", "time", "product_id", "product", "calories",
                     "price", "type", "quantity", "amount", "payment type"])

    # Generate sales records
    for i in range(100):
        item = generate_drink_items() if random.choice([True, False]) else generate_food_items()
        date = fake.date_between_dates(date_start=datetime(2022, 1, 1),
                                       date_end=datetime(2022, 12, 31)).strftime("%m/%d/%Y")
        sale_time = random_time(opening, closing).strftime("%I:%M %p")
        quantity = random.randint(1, 3)
        amount = round(quantity * item["price"], 2)
        writer.writerow([i + 1, date, sale_time, item["id"], item["name"], item["calories"],
                         item["price"], item["type"], quantity, amount, generate_payment_types()])
```

This code selects from 15 different drink items and 10 different food items, all with unique names, and writes 100 sales records to a CSV file named “coffee_shop_sales_chatgpt_data.csv”.

You can find sample reference code in this GitHub location.

Copy and paste the code into VS Code and run it.

The generated synthetic data will look like this: https://github.com/garystafford/ten-ways-gen-ai-code-gen/blob/main/data/output/coffee_shop_sales_data_chatgpt.csv
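Generated code can still produce data that quietly violates the prompt, so it is worth checking the output against the stated rules before training on it. Below is a minimal sketch. It assumes the column names and formats written by the Faker-based script: a `payment type` column, 12-hour times such as `07:30 AM`, and `MM/DD/YYYY` dates. The first script writes `payment_type` and 24-hour times, so it would need small changes. The function name is hypothetical.

```python
import csv
import os
from datetime import datetime, time

PAYMENT_TYPES = {"cash", "credit", "debit", "gift card"}


def validate_sales_rows(lines):
    """Return (row id, problem) pairs for rows that break the rules stated in the prompt."""
    problems = []
    for row in csv.DictReader(lines):
        rid = row["id"]
        if datetime.strptime(row["date"], "%m/%d/%Y").year != 2022:
            problems.append((rid, "date outside 2022"))
        sold_at = datetime.strptime(row["time"], "%I:%M %p").time()
        if not time(6, 0) <= sold_at <= time(21, 0):
            problems.append((rid, "time outside 6:00am-9:00pm"))
        quantity = int(row["quantity"])
        if not 1 <= quantity <= 3:
            problems.append((rid, "quantity out of range"))
        # Allow half a cent of tolerance for rounding
        if abs(float(row["amount"]) - quantity * float(row["price"])) > 0.005:
            problems.append((rid, "amount != price * quantity"))
        if row["type"] not in {"drink", "food"}:
            problems.append((rid, "unknown type"))
        if row["payment type"] not in PAYMENT_TYPES:
            problems.append((rid, "unknown payment type"))
    return problems


if __name__ == "__main__":
    path = "coffee_shop_sales_chatgpt_data.csv"
    if os.path.exists(path):
        with open(path, newline="") as f:
            print(validate_sales_rows(f) or "no problems found")
```

An empty result only means the explicit rules hold. It says nothing about whether the data is statistically realistic, which the evaluation section below addresses.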


Step 2: Training the model with LLM-generated synthetic data

We will now train the sales prediction model with the synthesized data. This involves several steps:

- Preprocessing the data

- Splitting it into training and testing datasets

- Selecting a model

- Training the model

- Evaluating its performance

- Using it for prediction.

Below is a high-level description of these steps using Python and popular libraries like pandas and scikit-learn:

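Note: The exact code and approach depend on the specifics of your application, data, and prediction task. The description below assumes a regression task, where you predict a continuous value such as the sales amount. Because `amount` in this synthetic data is simply price × quantity, a model can learn it almost perfectly. The example therefore demonstrates the workflow rather than a realistic forecasting problem.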

Load the data: Start by loading your synthetic data into a pandas DataFrame:

```python
import pandas as pd

data = pd.read_csv("coffee_shop_sales_chatgpt_data.csv")
```

Preprocess the data: Before training your model, you will need to preprocess your data. This could include:

- Converting categorical variables into numeric variables using techniques like one-hot encoding.

- Normalizing numeric variables so they’re on the same scale.

Here is an example using pandas and scikit-learn:

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# One-hot encoding for categorical features
# (handle_unknown="ignore" encodes categories not seen during fitting as all zeros)
encoder = OneHotEncoder(handle_unknown="ignore")
categorical_features = ["product", "type", "payment type"]
encoded_features = encoder.fit_transform(data[categorical_features]).toarray()

# Normalizing numeric features
scaler = StandardScaler()
numeric_features = ["calories", "price", "quantity"]
scaled_features = scaler.fit_transform(data[numeric_features])

# Combine the processed features back into a single array
X = np.concatenate([scaled_features, encoded_features], axis=1)
y = data["amount"]
```

Split the data: You should split your data into a training set and a testing set. This allows you to evaluate your model’s performance on unseen data:

```python
from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
```

Train the model: Choose a suitable machine learning model for sales prediction. For instance, a RandomForestRegressor or GradientBoostingRegressor can be used:

```python
from sklearn.ensemble import RandomForestRegressor

model = RandomForestRegressor()
model.fit(X_train, y_train)
```

Evaluate the model: Use the testing set to evaluate the model’s performance. A common metric for regression tasks is the mean absolute error (MAE):

```python
from sklearn.metrics import mean_absolute_error

y_pred = model.predict(X_test)
print("Mean Absolute Error:", mean_absolute_error(y_test, y_pred))
```
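MAE is simply the average of the absolute differences between actual and predicted values. The plain-Python sketch below shows that arithmetic on three hypothetical sale amounts. It is only an illustration, and `mae` is a hypothetical helper rather than scikit-learn’s function.

```python
def mae(y_true, y_pred):
    """Mean absolute error: the average absolute gap between actual and predicted values."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Three hypothetical sale amounts (USD) and a model's predictions for them.
actual = [7.0, 2.5, 10.5]
predicted = [6.5, 3.0, 10.5]
print(mae(actual, predicted))  # (0.5 + 0.5 + 0.0) / 3, about 0.333
```

Because MAE is in the same units as the target, an MAE of about 0.33 here means predictions are off by roughly 33 cents on average.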

Predict sales: With the model trained, you can now use it to predict sales:

```python
# Let's say `new_data` is your new sales data for prediction.
# sales_data.csv (from the first script) names its payment column "payment_type",
# so rename it to match the column used when the encoder was fitted.
new_data = pd.read_csv("sales_data.csv").rename(columns={"payment_type": "payment type"})


def preprocess(new_data):
    # Perform the same preprocessing steps as before, reusing the fitted encoder and scaler
    encoded_features = encoder.transform(new_data[categorical_features]).toarray()
    scaled_features = scaler.transform(new_data[numeric_features])

    # Combine the processed features back into a single array
    return np.concatenate([scaled_features, encoded_features], axis=1)


# Preprocess the new data
preprocessed_data = preprocess(new_data)

# Use the preprocessed data to make predictions
predictions = model.predict(preprocessed_data)
```

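Please note: The new data must use the same column names as the data the encoder and scaler were fitted on. In addition, `OneHotEncoder` raises an error on product names it did not see during fitting unless it was created with `handle_unknown="ignore"`.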

This example provides a basic outline of the process. Each step has many possible variations, and the best approach will depend on your specific data, problem, and requirements. For example, you might want to try different preprocessing techniques, machine learning models, and evaluation metrics.

How to evaluate the quality of synthesized training data?

For the adoption of synthetic data in machine learning and analytics projects, it’s not only essential to ensure the synthetic data serves its intended purpose and meets application requirements, but it’s also crucial to measure and ensure the quality of the produced data.

In light of growing legal and ethical mandates for privacy protection, synthetic data’s capability to eliminate sensitive and original information during its generation is one of its key strengths. Therefore, alongside quality, we require metrics to assess the risk of potential privacy breaches, if any, and to ensure that the generation process does not merely replicate the original data.

To address these needs, we can evaluate the quality of synthetic data across multiple dimensions, facilitating a better understanding of the generated data for users, stakeholders, and ourselves.

The quality of generated synthetic data is evaluated across three primary dimensions:

- Fidelity

- Utility

- Privacy

A synthetic data quality report should be able to answer the following questions about the generated synthetic data:

- How does this synthetic data compare with the original training set?

- What is the usefulness of this synthetic data in our downstream applications?

- Has any information been inadvertently leaked from the original training set into the synthetic data?

- Has any sensitive information from other datasets (not used for model training) been unintentionally synthesized by our model?
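A simple starting point for the leakage questions is to count synthetic rows that copy a training row verbatim. A second check measures how close each synthetic row sits to its nearest real row, a measure often called distance to closest record; very small distances suggest the generator may be memorizing. Below is a minimal brute-force sketch, assuming numeric features that have already been scaled. The function names and data are hypothetical.

```python
import math


def exact_copies(real_rows, synth_rows):
    """Count synthetic rows that are verbatim copies of a real training row."""
    real_set = {tuple(row) for row in real_rows}
    return sum(tuple(row) in real_set for row in synth_rows)


def distance_to_closest_record(real_rows, synth_rows):
    """Euclidean distance from each synthetic row to its nearest real row.

    Brute force, O(len(real_rows) * len(synth_rows)); fine for a quick check on small data.
    """
    return [min(math.dist(s, r) for r in real_rows) for s in synth_rows]


# Hypothetical (price, quantity) rows; in practice, scale features before comparing distances.
real = [(2.0, 1), (3.5, 2), (2.5, 3)]
synthetic = [(2.0, 1), (3.4, 2), (4.0, 1)]
print("exact copies:", exact_copies(real, synthetic))
print("distances:", distance_to_closest_record(real, synthetic))
```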

The metrics that translate these dimensions for end users need to be flexible, because the generated data can have varying distributions, sizes, and behaviors. They should also be easy to understand and interpret.

In essence, the metrics should be completely data-driven, not requiring any pre-existing knowledge or domain-specific information. However, if users wish to implement certain rules and constraints relevant to a particular business domain, they should be able to specify them during the synthesis process to ensure domain-specific fidelity is maintained.

Let’s look at each of these dimensions in more detail.

Evaluating fidelity

When we talk about the quality of synthetic data, one of the key aspects we consider is ‘fidelity’: how closely the synthetic data matches the original data. The synthetic data should be similar enough to the original to serve its purpose well.

Let’s break down the ways we measure fidelity:

- Statistical comparisons: This compares key statistics of the original and synthetic datasets, such as the mean, median, standard deviation, number of distinct values, number of missing values, and range of values. We compute these for each feature (column) to check whether the synthetic data is statistically similar to the original. If it is not, we might need to regenerate the synthetic data with different settings.

- Histogram similarity score: This score measures how similar the distribution of each feature is in the synthetic and original datasets. A score of 1 means the feature’s distribution in the synthetic data perfectly matches the original.

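- Mutual information score: Mutual information measures how dependent two features are on each other, that is, how much information about one feature you gain by observing another. Comparing the mutual information of each feature pair in the original and synthetic data shows whether these dependencies have been preserved.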

- Correlation score: This score tells us how well relationships between two or more columns of data have been preserved in the synthetic data. These relationships are important because they can reveal connections between different pieces of data.
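The fidelity measures above can be sketched in plain Python. This minimal illustration assumes numeric columns stored as lists of floats, and discrete columns for mutual information. The function names are hypothetical. The exact definitions are also assumptions, not the formulas of any particular evaluation tool:

- histogram similarity is histogram intersection over shared bins;
- the correlation score is one minus half the mean absolute gap between pairwise Pearson correlations.

```python
import math
import random
import statistics
from collections import Counter


def summary(values: list[float]) -> dict[str, float]:
    """Per-column statistics to compare side by side (needs at least two values)."""
    return {
        "mean": statistics.fmean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
        "min": min(values),
        "max": max(values),
        "distinct": len(set(values)),
    }


def histogram_similarity(real: list[float], synth: list[float], bins: int = 10) -> float:
    """Histogram intersection over shared bins; 1.0 means identical binned distributions."""
    lo, hi = min(real + synth), max(real + synth)
    width = (hi - lo) / bins or 1.0  # guard against a constant column

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            counts[min(int((v - lo) / width), bins - 1)] += 1
        return [c / len(values) for c in counts]

    return sum(min(p, q) for p, q in zip(proportions(real), proportions(synth)))


def mutual_information(x: list, y: list) -> float:
    """Mutual information (in nats) between two discrete columns."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    return sum((c / n) * math.log(c * n / (px[a] * py[b])) for (a, b), c in pxy.items())


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length columns."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / math.sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def correlation_score(real: dict[str, list[float]], synth: dict[str, list[float]]) -> float:
    """1 - half the mean absolute gap between pairwise correlations (1.0 = fully preserved)."""
    cols = sorted(real)
    gaps = [
        abs(pearson(real[a], real[b]) - pearson(synth[a], synth[b]))
        for i, a in enumerate(cols)
        for b in cols[i + 1:]
    ]
    return 1 - statistics.fmean(gaps) / 2


if __name__ == "__main__":
    rng = random.Random(0)

    def make(n: int) -> dict[str, list[float]]:
        x = [rng.gauss(0, 1) for _ in range(n)]
        return {"x": x, "y": [2 * v + rng.gauss(0, 0.5) for v in x]}

    real, synth = make(500), make(500)
    print(summary(real["x"]))
    print(summary(synth["x"]))
    print("histogram similarity:", histogram_similarity(real["x"], synth["x"]))
    print("correlation score:", correlation_score(real, synth))
```

Histogram intersection is used here because it naturally falls between 0 and 1, which matches the "1 means a perfect match" reading above. `mutual_information` returns a raw value. To turn it into a score, compare it pair by pair between the original and synthetic data, as `correlation_score` does for correlations.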

For certain types of data, like time-series or sequential data, we also use additional metrics to measure the quality. For instance, we can look at autocorrelation and partial autocorrelation scores to see how well the synthetic data has preserved significant correlations from the original dataset.
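For sequential data, one way to compare temporal structure is to compute the autocorrelation function (ACF) of a real series and a synthetic series at several lags and measure the gap. The sketch below assumes each series is a list of floats. `acf_similarity` and its scaling to a 0 to 1 score are hypothetical choices, and partial autocorrelation is left out for brevity.

```python
import math
import random
import statistics


def autocorrelation(series: list[float], lag: int) -> float:
    """Sample autocorrelation at `lag` (standard biased estimator, bounded by 1)."""
    mean = statistics.fmean(series)
    denom = sum((v - mean) ** 2 for v in series)
    num = sum((series[t] - mean) * (series[t + lag] - mean) for t in range(len(series) - lag))
    return num / denom


def acf_similarity(real: list[float], synth: list[float], max_lag: int = 10) -> float:
    """1 - half the mean absolute ACF gap over lags 1..max_lag (1.0 = identical ACFs)."""
    gaps = [
        abs(autocorrelation(real, k) - autocorrelation(synth, k))
        for k in range(1, max_lag + 1)
    ]
    return 1 - statistics.fmean(gaps) / 2


if __name__ == "__main__":
    rng = random.Random(42)
    real = [math.sin(t / 5) for t in range(200)]
    # A phase-shifted, slightly noisy copy keeps the temporal structure...
    faithful = [math.sin(t / 5 + 0.3) + rng.gauss(0, 0.05) for t in range(200)]
    # ...while independent noise with a similar spread throws it away.
    noise = [rng.gauss(0, 0.7) for _ in range(200)]
    print("faithful:", acf_similarity(real, faithful))
    print("noise:   ", acf_similarity(real, noise))
```

The noise series in the demo shows why marginal statistics alone are not enough for sequential data. Its values have a similar spread to the sine wave, but its ACF is close to zero at every lag.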

Evaluating utility

In addition to fidelity, the ‘utility’ or usefulness of synthetic data is also important. We need to ensure that the synthetic data performs well on common tasks in data science.

- Prediction score: This measures how well models trained on synthetic data perform compared to models trained on the original data. Both models are evaluated on the same held-out test set, which neither saw during training. This shows how well the synthetic data supports training effective models.

- Feature importance score: This score ranks features (columns) by their importance to a model trained on each dataset and checks whether the ranking is the same for the synthetic and original data. If the order matches, the synthetic data has high utility.

- QScore: This score is used to check if a model trained on synthetic data will give the same results as a model trained on original data. It does this by running random aggregation-based queries on both datasets and comparing the results. If the results are similar, it means the synthetic data has good utility.
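Two of these utility checks lend themselves to short sketches. The code below assumes feature importances have already been computed for models trained on each dataset (the numbers in the demo are made up). It compares the two rankings with Spearman’s rank correlation, using the no-ties formula. It then approximates the QScore idea with random group-by-mean queries. `random_query_score` and its capped relative-error scoring are hypothetical, not the definition used by any specific product.

```python
import random
import statistics
from collections import defaultdict


def _ranks(values: list[float]) -> list[int]:
    """1-based rank of each value (ties are broken by position in this sketch)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank
    return ranks


def importance_rank_agreement(real_imp: dict[str, float], synth_imp: dict[str, float]) -> float:
    """Spearman rank correlation of two feature-importance rankings (1.0 = same order)."""
    features = sorted(real_imp)
    n = len(features)
    r_real = _ranks([real_imp[f] for f in features])
    r_synth = _ranks([synth_imp[f] for f in features])
    d2 = sum((a - b) ** 2 for a, b in zip(r_real, r_synth))
    return 1 - 6 * d2 / (n * (n * n - 1))


def _group_mean(rows: list[dict], group_col: str, value_col: str) -> dict:
    """Answer one aggregation query: mean of value_col per group_col value."""
    groups: dict = defaultdict(list)
    for row in rows:
        groups[row[group_col]].append(row[value_col])
    return {key: statistics.fmean(vals) for key, vals in groups.items()}


def random_query_score(real, synth, group_cols, value_cols, n_queries=50, seed=0) -> float:
    """QScore-style check: 1 - mean relative error of random group-by-mean queries."""
    rng = random.Random(seed)
    errors = []
    for _ in range(n_queries):
        g, v = rng.choice(group_cols), rng.choice(value_cols)
        real_ans, synth_ans = _group_mean(real, g, v), _group_mean(synth, g, v)
        for key, r_val in real_ans.items():
            if key not in synth_ans:
                errors.append(1.0)  # group missing entirely from the synthetic data
            else:
                rel = abs(synth_ans[key] - r_val) / (abs(r_val) or 1.0)
                errors.append(min(rel, 1.0))  # cap so one outlier cannot dominate
    return 1 - statistics.fmean(errors)


if __name__ == "__main__":
    # Hypothetical importances, e.g. from models trained on each dataset.
    print(importance_rank_agreement(
        {"age": 0.5, "income": 0.3, "tenure": 0.2},
        {"age": 0.45, "income": 0.25, "tenure": 0.30},
    ))
    real = [{"region": r, "spend": s} for r, s in [("N", 10.0), ("N", 12.0), ("S", 20.0)]]
    synth = [{"region": r, "spend": s} for r, s in [("N", 11.0), ("S", 19.0), ("S", 21.0)]]
    print(random_query_score(real, synth, ["region"], ["spend"]))
```

In the demo, `age` stays the most important feature in both datasets, but `income` and `tenure` swap places, so the agreement drops from 1.0 to 0.5.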

Evaluating privacy

Privacy is a significant concern when it comes to synthesizing data. It’s important to protect sensitive information to meet ethical and legal requirements.

- Exact match score: This is a measure of how many original data records can be found in the synthetic dataset. We want this score to be low to ensure privacy.

- Neighbors’ privacy score: This score indicates how many synthetic records are very similar to the real ones, which could be a potential privacy concern. A lower score means better privacy.

- Membership inference score: This score tells us how likely it is that someone could correctly guess if a specific data record was part of the original dataset. The lower this score, the better the privacy.

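- Holdout concept: It’s important to prevent the synthetic data from simply copying the original data. To check for this, a portion of the original data is set aside before generation and used to evaluate the synthetic data. If synthetic records are much closer to the training records than to these unseen holdout records, the generator is likely memorizing rather than generalizing. This helps maintain the balance between data utility and privacy.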
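The exact match, neighbors’ privacy, and holdout checks can be sketched with nearest-neighbour distances. This minimal version assumes records are numeric tuples already scaled to comparable ranges. The distance threshold and all function names are hypothetical. A brute-force search like this is only practical for small datasets.

```python
import math
import statistics

Record = tuple[float, ...]


def exact_match_score(original: list[Record], synthetic: list[Record]) -> float:
    """Share of original records that reappear verbatim in the synthetic data (lower is better)."""
    synth_set = set(synthetic)
    return sum(rec in synth_set for rec in original) / len(original)


def nearest_distance(record: Record, others: list[Record]) -> float:
    """Euclidean distance from `record` to its closest neighbour in `others`."""
    return min(math.dist(record, other) for other in others)


def neighbors_privacy_score(train: list[Record], synthetic: list[Record], threshold: float) -> float:
    """Share of synthetic records within `threshold` of a training record (lower is better)."""
    close = sum(nearest_distance(s, train) < threshold for s in synthetic)
    return close / len(synthetic)


def holdout_distances(train: list[Record], holdout: list[Record],
                      synthetic: list[Record]) -> tuple[float, float]:
    """Median distance from synthetic records to their nearest train vs. holdout record.

    The holdout rows were never shown to the generator. If synthetic rows sit much
    closer to the training rows than to the holdout rows, the generator may be
    copying its training data instead of generalizing.
    """
    to_train = statistics.median([nearest_distance(s, train) for s in synthetic])
    to_holdout = statistics.median([nearest_distance(s, holdout) for s in synthetic])
    return to_train, to_holdout


if __name__ == "__main__":
    train = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
    holdout = [(3.0, 3.0)]
    synthetic = [(0.0, 0.0), (1.05, 1.0), (9.0, 9.0)]
    print("exact match:", exact_match_score(train, synthetic))
    print("neighbors:", neighbors_privacy_score(train, synthetic, threshold=0.1))
    print("median distance (train, holdout):", holdout_distances(train, holdout, synthetic))
```

In a real evaluation you would typically replace the quadratic scan in `nearest_distance` with a spatial index or an approximate nearest-neighbour search.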

Endnote

LLMs have become an increasingly valuable asset for data scientists and researchers, especially when it comes to synthesizing training data. Through the power of advanced machine learning algorithms and generative models, synthetic data can be created to mimic real-world data while maintaining privacy and confidentiality.

While synthetic data generation presents challenges, such as modeling complex data distributions, its potential benefits are evident. Synthetic data finds application in various domains, including training and testing machine learning models, conducting simulations, and performing experiments. As the field of synthetic data generation continues to progress, we can anticipate the emergence of innovative techniques and tools, fueling further advancements in this exciting area of research and development.

Looking to explore LLMs for synthesizing training data? Use our Large Language Model (LLM) development service for easy, unbiased data creation. Contact LeewayHertz’s experts to start your AI journey today!
