How to build a generative AI solution: A step-by-step guide

Generative AI has gained significant attention in the tech industry, with investors, policymakers, and society at large talking about AI models like ChatGPT and Stable Diffusion. According to KPMG research, 72% of U.S. CEOs say generative AI is a top investment priority despite uncertain economic conditions. CB Insights concludes that 2023 was a breakout year for investment in generative AI startups, with equity funding topping $21.8B across 426 deals. Many generative AI companies are securing significant venture funding at high valuations. Jasper, a copywriting assistant, raised $125 million at a valuation of $1.5 billion, while Hugging Face and Stability AI raised $100 million and $101 million, respectively, at valuations of $2 billion and $1 billion. In a similar vein, Inflection AI received $225 million at a post-money valuation of $1 billion.

These achievements are comparable to those of OpenAI, which in 2019 secured more than $1 billion from Microsoft at a valuation of $25 billion. This indicates that despite the current market downturn and layoffs plaguing the tech sector, generative AI companies are still drawing the attention of investors, and for good reason.

Generative AI has the potential to transform industries and bring about innovative solutions, making it a key differentiator for businesses looking to stay ahead of the curve. It can be used for developing advanced products, creating engaging marketing campaigns, and streamlining complex workflows, ultimately transforming the way we work, play, and interact with the world around us.

As the name suggests, generative AI can produce a wide range of content, from text and images to music, code, video, and audio. While the concept is not new, recent advances in machine learning techniques, particularly transformers, have elevated generative AI to new heights. For many enterprises, adopting this technology is becoming an important part of staying competitive: applied well, it can improve operations, increase profits, and lead to a more satisfied customer base. This is why interest in developing generative AI solutions has surged in recent times.

This article provides an overview of generative AI and a detailed step-by-step guide to building generative AI solutions.

- What is generative AI?

- Generative AI tech stack: An overview

- Generative AI applications

- How can you leverage generative AI technology to build robust solutions?

- Why build a generative AI solution?

- The right tech stack to build a generative AI solution

- Considerations for choosing the right architecture for building generative AI solutions

- How to build generative AI solution – a step-by-step guide

- Build a generative AI solution: A chat interface using GPT4

- Best practices for building generative AI solutions

- Key strategies for developing high-performing generative AI solutions

- How LeewayHertz’s GenAI orchestration platform, ZBrain simplifies the GenAI solution development process?

- Why should you consider creating an AI impact assessment when designing a generative AI solution?

What is generative AI?

Generative AI enables computers to generate new content using existing data, such as text, audio files, or images. It has significant applications in various fields, including art, music, writing, and advertising. It can also be used for data augmentation, where it generates new data to supplement a small dataset, and for synthetic data generation, where it produces data for tasks in which real-world data is difficult or expensive to collect. With generative AI, computers can detect the underlying patterns in the input and produce similar content, unlocking new levels of creativity and innovation. Various techniques make generative AI possible, including transformers, generative adversarial networks (GANs), and variational autoencoders. Transformer-based models such as GPT-3, LaMDA, Wu-Dao, and ChatGPT rely on an attention mechanism, loosely modeled on cognitive attention, that measures the significance of each part of the input. They are trained on massive datasets to understand language or images, learn classification tasks, and generate text or images.
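To make the idea of measuring the significance of input parts more concrete, here is a minimal pure-Python sketch of scaled dot-product attention weights for a single query. The vectors and function names are invented for illustration and are not taken from any particular model.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention: how strongly the query attends to each key."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hypothetical 2-dimensional vectors standing in for three input tokens.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [1.0, 0.0]

weights = attention_weights(query, keys)
print(weights)
```

Input parts whose vectors align with the query (the first and third here) receive larger weights than the part that does not (the second). A real transformer learns these vectors during training and applies the weights to a further set of value vectors.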

GANs consist of two neural networks, a generator and a discriminator, that are trained against each other until they reach an equilibrium. The generator network produces new data or content resembling the source data, while the discriminator network tries to tell source data apart from generated data, which pushes the generator toward more realistic output. Variational autoencoders use an encoder to compress the input into a compact code, which the decoder then uses to reconstruct the original input. This code captures the distribution of the input data in far fewer dimensions, making variational autoencoders an efficient and powerful tool for generative AI.
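The tension between generator and discriminator can be sketched by computing the two losses a GAN optimizes. The example below is a toy under stated assumptions: the one-parameter "networks", the data values, and all names are hypothetical, and the generator loss is the commonly used non-saturating variant. No training loop is included.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def generator(z, scale, shift):
    """Toy generator: maps a noise value z to a fake data point."""
    return scale * z + shift

def discriminator(x, weight, bias):
    """Toy discriminator: estimated probability that x is real."""
    return sigmoid(weight * x + bias)

def mean(values):
    return sum(values) / len(values)

def gan_losses(real, noise, g_params, d_params):
    fake = [generator(z, *g_params) for z in noise]
    p_real = [discriminator(x, *d_params) for x in real]
    p_fake = [discriminator(x, *d_params) for x in fake]
    # The discriminator wants p_real close to 1 and p_fake close to 0.
    d_loss = -mean([math.log(p) for p in p_real]) - mean([math.log(1.0 - p) for p in p_fake])
    # The generator wants the discriminator to rate its samples as real.
    g_loss = -mean([math.log(p) for p in p_fake])
    return d_loss, g_loss

real = [4.0, 5.0, 6.0]
noise = [-1.0, 0.0, 1.0]

# Poor generator, sharp discriminator: the discriminator wins easily.
early = gan_losses(real, noise, g_params=(1.0, 0.0), d_params=(2.0, -5.0))
# Generator matches the real data; the discriminator can only guess (outputs 0.5).
balanced = gan_losses(real, noise, g_params=(1.0, 5.0), d_params=(0.0, 0.0))
print(early, balanced)
```

In the balanced case the discriminator outputs 0.5 for every sample, so its loss is 2·ln 2 and the generator loss is ln 2. This is the equilibrium that GAN training aims for.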

Some potential benefits of generative AI include:

- Higher efficiency: You can automate business tasks and processes using generative AI, freeing resources for more valuable work.

- Creativity: Generative AI can generate novel ideas and approaches humans might not have otherwise considered.

- Increased productivity: Generative AI helps automate tasks and processes to help businesses increase their productivity and output.

- Reduced costs: Generative AI can lead to cost savings for businesses by automating tasks that would otherwise be performed by humans.

- Improved decision-making: By helping businesses analyze vast amounts of data, generative AI allows for more informed decision-making.

- Personalized experiences: Generative AI can assist businesses in delivering more personalized experiences to their customers, enhancing the overall customer experience.

Generative AI tech stack: An overview

In this section, we discuss the inner workings of generative AI, exploring the underlying components, algorithms, and frameworks that power generative AI systems.

Application frameworks

Application frameworks have emerged in order to quickly incorporate and rationalize new developments. They simplify the process of creating and updating applications. Various frameworks such as LangChain, Fixie, Microsoft’s Semantic Kernel and Google Cloud’s Vertex AI platform have gained popularity over time. They are being used by developers to create applications that produce novel content, facilitate natural language searches, and execute tasks autonomously, changing the way we work and synthesize information.

Tools ecosystem

The ecosystem allows developers to realize their ideas by drawing on their understanding of their customers and the domain, without needing the technical expertise required at the infrastructure level. The ecosystem comprises four elements: models, data, evaluation platform, and deployment.

Models

Foundation Models (FMs) serve as the brain of the system, capable of human-like reasoning. Developers have various FMs to choose from based on output quality, modalities, context window size, cost, and latency. Developers can opt for proprietary FMs created by vendors such as OpenAI, Anthropic, or Cohere, host one of many open-source FMs, or even train their own model. Companies like OctoML also offer services to host models on servers, deploy them on edge devices, or even run them in browsers, improving privacy and security and reducing latency and cost.

Data

Large Language Models (LLMs) are powerful technologies but can only reason based on the data they were trained on. Developers can use data loaders to bring in data from various sources, including structured data sources like databases and unstructured data sources. Vector databases store embedding vectors efficiently so that LLM applications can query them for relevant content. Retrieval-augmented generation (RAG) is a technique for personalizing model outputs by retrieving relevant data and including it directly in the prompt, providing a personalized experience without modifying the model weights through fine-tuning.
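The retrieval step described above can be sketched in a few lines. This is a simplified illustration, not a production design: the bag-of-words `embed` function stands in for a learned embedding model, the in-memory list stands in for a vector database, and the documents and prompt wording are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Stand-in embedding: word counts (a real system would use a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, documents, top_k=1):
    """Return the top_k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, documents, top_k=1):
    """Place the retrieved context directly in the prompt; model weights stay untouched."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, top_k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"

documents = [
    "Refunds are issued within 14 days of a return request.",
    "Our support desk is open Monday to Friday.",
    "Premium plans include priority onboarding.",
]
question = "How many days do refunds take?"
prompt = build_prompt(question, documents)
print(prompt)
```

The resulting prompt would then be sent to whichever foundation model the application uses. Swapping `embed` for a real embedding model and the list for a vector database leaves the flow unchanged.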

Evaluation platform

Developers have to balance model performance, inference cost, and latency. By iterating on prompts, fine-tuning the model, or switching between model providers, they can improve the trade-off across all three dimensions. Several evaluation tools exist to help developers determine the best prompts, provide offline and online experimentation tracking, and monitor model performance in production.
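As a rough illustration of prompt iteration, the sketch below scores two prompt variants on a small labeled set and records latency. Everything here is an assumption made for the example: `stub_model` is a hypothetical stand-in for a real model call, and the templates and examples are invented.

```python
import time

def evaluate(model_fn, prompt_template, examples):
    """Return accuracy and mean latency (seconds) for one prompt variant."""
    correct = 0
    latencies = []
    for text, expected in examples:
        start = time.perf_counter()
        answer = model_fn(prompt_template.format(text=text))
        latencies.append(time.perf_counter() - start)
        correct += int(answer.strip().lower() == expected)
    return {
        "accuracy": correct / len(examples),
        "mean_latency_s": sum(latencies) / len(latencies),
    }

def stub_model(prompt):
    """Hypothetical stand-in for an LLM: answers tersely only when asked for one word."""
    label = "positive" if "love" in prompt.lower() else "negative"
    return label if "one word" in prompt else f"The sentiment is {label}."

examples = [
    ("I love this product", "positive"),
    ("This is a waste of money", "negative"),
]
templates = {
    "plain": "What is the sentiment of: {text}",
    "constrained": "Answer with one word, positive or negative. Review: {text}",
}

results = {name: evaluate(stub_model, template, examples) for name, template in templates.items()}
best = max(results, key=lambda name: results[name]["accuracy"])
print(best, results)
```

A real evaluation would replace the stub with actual model calls, add per-call cost to the recorded metrics, and use a much larger test set.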

Deployment

Once the applications are ready, developers need to deploy them in production. This can be achieved by self-hosting LLM applications and deploying them using popular frameworks like Gradio, or using third-party services. Fixie, for example, can be used to build, share, and deploy AI agents in production. This complete generative AI stack is revolutionizing the way we create and process information and the way we work.

Generative AI applications

Generative AI is poised to drive the next generation of apps and transform how we approach programming, content development, visual arts, and other creative design and engineering tasks. Here are some areas where generative AI finds application:

Graphics

With advanced generative AI algorithms, you can transform any ordinary image into a stunning work of art imbued with your favorite artwork’s unique style and features. Whether you are starting with a rough doodle or a hand-drawn sketch of a human face, generative graphics algorithms can transform your initial creation into a photorealistic output. These algorithms can even instruct a computer to render any image in the style of a specific human artist, allowing you to achieve a level of authenticity that was previously unimaginable. The possibilities don’t stop there! Generative graphics can conjure new patterns, figures, and details that weren’t even present in the original image, taking your artistic creations to new heights of imagination and innovation.

Photos

With AI, your photos can now look even more lifelike! Generative AI transforms photography by enhancing realism and infusing artistic elements, offering an array of tools for photo correction and creative transformation. AI algorithms have the power to detect and fill in any missing, obscure, or misleading visual elements in your photos. You can say goodbye to disappointing images and hello to stunningly enhanced, corrected photos that truly capture the essence of your subject. This technology ensures every photo is not just seen but experienced. Crucial capabilities include:

- Realistic enhancements: Detect and correct missing, obscure, or misleading visual elements in photos, transforming them into stunning visuals.

- Text-to-image conversion: Input textual prompts to generate images, specifying subjects, styles, or settings to meet exact requirements.

- High-resolution upgrades: Convert low-resolution photos into high-resolution masterpieces, providing detail and clarity that mimic professional photography.

- Synthetic images: Create natural-looking, synthetic human faces by blending features from existing portraits or abstracting specific characteristics, offering a digital touch of a professional artist.

- Semantic image-to-image translation: Produce realistic versions of images from semantic sketches or photos.

- Semantic image generation: Generate photo-realistic images from simple semantic label maps, turning abstract concepts into vivid, lifelike pictures.

- Image completion: AI can seamlessly fill in missing parts of images, repair torn photographs, and enhance backgrounds, maintaining the integrity of the original photo while improving its aesthetics.

- Advanced manipulation: AI can alter elements like color, lighting, form, or style of images while preserving original details, allowing for creative reinterpretation without losing the essence of the original.

Generative AI not only perfects the photographic quality but also brings an artist’s touch to digital images, making it possible to realize visionary ideas through advanced technology.

Audio

Experience the next generation of AI-powered audio and music technology with generative AI! With the power of this AI technology, you can now transform any computer-generated voice into a natural-sounding human voice, as if it were produced in a human vocal tract. This technology can also translate text to speech with remarkable naturalness. Whether you are creating a podcast, audiobook, or any other type of audio content, generative AI can bring your words to life in a way that truly connects with your audience. Also, if you want to create music that expresses authentic human emotion, AI can help you achieve your vision. These algorithms have the ability to compose music that feels like it was created by a human musician, with all the soul and feeling that comes with it. Whether you are looking to create a stirring soundtrack or a catchy jingle, generative AI helps you achieve your musical dreams.

Video

When it comes to making a film, every director has a unique vision for the final product, and with the power of generative AI, that vision can now be brought to life in ways that were previously impossible. By using it, directors can now tweak individual frames in their motion pictures to achieve any desired style, lighting, or other effects. Whether it is adding a dramatic flair or enhancing the natural beauty of a scene, AI can help filmmakers achieve their artistic vision like never before. The following points summarize the advanced video features enabled by generative AI:

- Automated video editing and composition: Generative AI simplifies complex editing tasks such as sequencing, cutting, and merging clips, automating what traditionally takes hours into mere minutes.

- Animations and special effects: AI tools can create dynamic animations and add visually stunning effects effortlessly, enabling creators to add depth and drama to their narratives without requiring extensive manual work.

- High-quality video creation: AI models can generate videos matching specific themes or styles, providing a base for further creative development.

- Enhanced resolution and manipulation: AI-driven enhancements improve video quality by upgrading resolution, refining visual details, and completing scenes where information may be missing.

- Video style transfers: AI tools can adopt the style of a reference image or video and apply it to new video content to ensure thematic consistency across works.

- Video predictions: AI tools can anticipate and generate future frames in a video sequence, understanding and interpreting the content’s spatial and temporal dynamics, which is crucial for tasks like creating extended scenes from short clips.

Text

Transform the way you create content with the power of generative AI technology! Utilizing generative AI, you can now generate natural language content at a rapid pace and in large varieties while maintaining a high level of quality. From captions to annotations, AI can generate a variety of narratives from images and other content, making it easier than ever to create engaging and informative content for your audience. With the ability to blend existing fonts into new designs, you can take your visual content to the next level, creating unique and eye-catching designs that truly stand out. Here’s how generative AI is applied in various text-related tasks:

- Content creation: Generative AI significantly accelerates the creation of diverse written content such as blogs, marketing posts, and social media updates.

- Language translation: AI models are fine-tuned to perform complex translation tasks, analyzing texts in one language and rendering them in another with high accuracy. This capability is essential for global communication and content localization.

- Virtual assistants and chatbots: Through virtual assistants and chatbots, generative AI helps deliver real-time, contextually appropriate responses during user interactions.

- Content aggregation and summarization: Beyond creating content, generative AI summarizes extensive texts such as research papers, news articles, and long emails. This functionality helps users quickly grasp the essence of bulky documents, facilitating information consumption and management.

- Automatic report generation: In fields like business intelligence and data analysis, generative AI simplifies the interpretation of complex datasets by automatically generating comprehensive reports. These reports highlight critical trends, patterns, and insights, aiding stakeholders in making informed decisions.

Code

Unlock the full potential of AI technology and enhance your programming skills. With AI, you can now generate program code tailored to specific application domains, making it easier to create high-quality code that meets your needs. Generative models also learn from existing code and produce new code based on that knowledge. This can help streamline the programming process, saving time and increasing efficiency.

Here’s a look at how generative AI applications are making an impact:

- Code generation: AI models trained on extensive codebases can generate functions, snippets, or entire programs from prompted requirements. This helps accelerate development by automating repetitive tasks, allowing developers to concentrate on problem-solving and architectural design.

- Code completion: Enhances coding efficiency with intelligent code completion tools that predict subsequent lines of code based on the current context. Integrated into IDEs (Integrated Development Environments), these tools expedite the coding process and minimize coding errors.

- Natural language interfaces for coding: Facilitates the interaction between developers and software systems through human language, reducing the need for detailed programming language knowledge.

- Automated testing: Utilizes AI to automate the generation of test cases and scenarios, traditionally a time-consuming aspect of the software development lifecycle. It also analyzes code to predict execution paths, enhancing test coverage and helping developers identify and resolve potential issues early.
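The idea of learning from existing code and predicting what comes next can be shown with a deliberately tiny model. The sketch below uses a bigram frequency table as a stand-in for the large neural models that real completion tools rely on. The corpus and function names are hypothetical, and the prefix is assumed to be non-empty.

```python
from collections import Counter, defaultdict

def train(code_lines):
    """Count which token follows each token in the example code."""
    follows = defaultdict(Counter)
    for line in code_lines:
        tokens = line.split()
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows

def complete(follows, prefix, max_tokens=5):
    """Greedily extend a non-empty prefix with the most frequent next token."""
    tokens = prefix.split()
    for _ in range(max_tokens):
        candidates = follows.get(tokens[-1])
        if not candidates:
            break  # nothing learned about what follows this token
        tokens.append(candidates.most_common(1)[0][0])
    return " ".join(tokens)

# Hypothetical "existing code" the model learns from (whitespace-separated tokens).
corpus = [
    "for i in range ( n ) :",
    "for item in items :",
    "for i in range ( 10 ) :",
]
model = train(corpus)
suggestion = complete(model, "for i in", max_tokens=2)
print(suggestion)
```

Production tools condition on far more context than the previous token, but the principle is the same: suggest the continuation that the training code makes most likely.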

Synthetic data generation

Synthetic data generation involves using AI algorithms to create artificial data sets that mimic the statistical properties of real-world data. This data is generated from scratch or based on existing data but does not directly replicate the original samples, thereby preserving confidentiality and privacy. This capability is useful in:

- Training AI models: Synthetic data can be used to train machine learning models in situations where collecting real-world data is impractical, expensive, or privacy-sensitive.

- Data privacy: Organizations can use synthetic data to enable data sharing or testing without exposing actual customer data, thus adhering to privacy regulations like GDPR.

- Testing and Quality Assurance: Software developers use synthetic data to test new applications, ensuring robustness and functionality across diverse scenarios that might not be available in real datasets.
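A very simple version of mimicking the statistical properties of real-world data is to fit a distribution to the real sample and draw fresh values from it. The sketch below assumes the data is roughly normally distributed and uses invented measurements. Real synthetic data generators, such as GANs or VAEs, learn much richer structure.

```python
import random
import statistics

def fit(real_values):
    """Estimate the mean and standard deviation of the real sample."""
    return statistics.mean(real_values), statistics.stdev(real_values)

def generate(mean, stdev, count, seed=0):
    """Draw new values from a normal distribution with the fitted parameters."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    return [rng.gauss(mean, stdev) for _ in range(count)]

# Hypothetical "real" measurements, for example order values in dollars.
real = [52.0, 47.5, 61.2, 58.3, 49.9, 55.1, 63.4, 50.6]

real_mean, real_stdev = fit(real)
synthetic = generate(real_mean, real_stdev, count=5000)

print(round(real_mean, 2), round(statistics.mean(synthetic), 2))
print(round(real_stdev, 2), round(statistics.stdev(synthetic), 2))
```

The synthetic values are new draws rather than copies of the original records, yet their mean and spread track the real sample. Whether such data satisfies a given privacy requirement still has to be assessed separately.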

Enterprise search

In recent times, one of the most transformative use cases of generative AI is its application in enterprise search systems. As businesses accumulate vast repositories of digital documents, finding relevant information becomes challenging. Generative AI offers a powerful solution by enhancing the capability, speed, and accuracy of search functions within an organization. Generative AI models can be trained to comprehend and analyze extensive collections of organizational documents such as contracts, internal reports, financial analyses, etc. These AI systems go beyond traditional keyword-based search technologies by understanding the context and semantics of user queries, thereby delivering more accurate and relevant results.

- Document summarization: AI can automatically identify and highlight key sections of documents. This is particularly useful for lengthy reports or contracts where decision-makers need to quickly understand essential content without reading the entire text.

- Contextual retrieval: Unlike basic search tools that return documents based on the presence of specific words, generative AI understands the query’s context, allowing it to fetch documents that are conceptually related, even if they do not contain the exact query terms.

- Trend analysis and insights: By aggregating and analyzing content across documents, AI can help identify trends and provide insights that are not immediately obvious, aiding in strategic decision-making.

Chatbot performance improvement

Generative AI plays a crucial role in enhancing the capabilities and performance of chatbots, making interactions between chatbots and users more engaging and effective. This improvement is driven primarily by generative models and natural language processing (NLP) advancements. Here’s how generative AI is enhancing chatbot performance:

- NLU enhancement: Generative AI models improve chatbots’ Natural Language Understanding (NLU) by training on extensive text data to grasp complex language patterns, contexts, and nuances, enhancing their ability to comprehend user inputs accurately.

- Human-like response generation: Generative AI enables chatbots to generate responses that mimic human conversation by training on diverse dialogues, allowing them to produce natural and tailored responses.

- Handling open-ended prompts: Chatbots equipped with generative AI can manage open-ended questions and unfamiliar topics by training on broad conversational datasets, enabling them to formulate plausible answers to a wide array of queries.

- User profiling: Generative AI aids chatbots in creating detailed user profiles by analyzing past interactions to discern preferences and behaviors, which helps personalize responses and enhance user engagement.

The landscape of generative AI applications is vast, encompassing a myriad of possibilities. The examples provided here offer just a snapshot of the most common and widely recognized use cases in this expansive and dynamic field.

How can you leverage generative AI technology for building robust solutions?

Generative AI technology is a rapidly growing field that offers a range of powerful solutions for various industries. By leveraging this technology, you can create robust and innovative solutions based on your industry that can help you to stay ahead of the competition. Here are some of the areas of implementation:

Automated custom software engineering

Generative AI is reshaping automated software engineering. Leading the way are tools like GitHub Copilot and Debuild, which use OpenAI’s GPT-3 and Codex to streamline coding processes and allow users to design and deploy web applications using their voice. Debuild’s open-source engine even lets users develop complex apps from just a few lines of commands. With AI-generated engineering designs, test cases, and automation, companies can develop digital solutions faster and more cost-effectively than ever before.

Automated custom software engineering using generative AI involves using machine learning models to generate code and automate software development processes. This technology streamlines coding, generates engineering designs, creates test cases, and automates testing, thereby reducing the costs and time associated with software development.

One way generative AI is used in automated custom software engineering is through natural language processing (NLP) and machine learning models such as GPT-3 and Codex. These models can interpret natural language instructions and generate the corresponding code to automate software development tasks. Another way is through automated machine learning (AutoML) tools, which can automatically generate models for specific tasks, such as classification or regression, without requiring manual configuration or tuning. This can help reduce the time and resources needed for software development.

Content generation with management

Generative AI is redefining digital content creation by enabling businesses to quickly and efficiently generate high-quality content using intelligent bots. There are numerous use cases for autonomous content generation, including creating better-performing digital ads, producing optimized copy for websites and apps, and quickly generating content for marketing pitches. By leveraging AI algorithms, businesses can optimize their ad creative and messaging to engage with potential customers, tailor their copy to readers’ needs, reduce research time, and generate persuasive copy and targeted messaging. Autonomous content generation is a powerful tool for any business, allowing them to create high-quality content faster and more efficiently than ever before while augmenting human creativity.

Omneky, Grammarly, DeepL, and Hypotenuse are leading services in the AI-powered content generation space. Omneky uses deep learning to customize advertising creatives across digital platforms, creating ads with a higher probability of increasing sales. Grammarly offers an AI-powered writing assistant for basic grammar, spelling corrections, and stylistic advice. DeepL is an AI-powered translation service that uses its language understanding capabilities to produce natural-sounding copy in other languages. Hypotenuse automates the process of creating product descriptions, blog articles, and advertising captions using AI-driven algorithms to create high-quality content in a fraction of the time it would typically take to write manually.

Marketing and customer experience

Generative AI transforms marketing and customer experience by enabling businesses to create personalized and tailored content at scale. With the help of AI-powered tools, businesses can generate high-quality content quickly and efficiently, saving time and resources. Autonomous content generation can be used for various marketing campaigns, copywriting, true personalization, assessing user insights, and creating high-quality user content quickly. This can include blog articles, ad captions, product descriptions, and more. AI-powered startups such as Kore.ai, Copy.ai, Jasper, and Andi are using generative AI models to create contextual content tailored to the needs of their customers. These platforms simplify virtual assistant development, generate marketing materials, provide conversational search engines, and help businesses save time and increase conversion rates.

Healthcare

Generative AI is transforming the healthcare industry by accelerating the drug discovery process, improving cancer diagnosis, assisting with diagnostically challenging tasks, and even supporting day-to-day medical tasks. Here are some examples:

- Mini protein drug discovery and development: Ordaos Bio uses its proprietary AI engine to accelerate the mini protein drug discovery process by uncovering critical patterns in drug discovery.

- Cancer diagnostics: Paige AI has developed generative models to assist with cancer diagnostics, creating more accurate algorithms and increasing the accuracy of diagnosis.

- Diagnostically challenging tasks: Ansible Health uses ChatGPT to assist with functions that would otherwise be difficult for humans, such as diagnostically challenging tasks.

- Day-to-day medical tasks: Generative AI is being used for day-to-day medical tasks, such as wellness checks and general practitioner tasks. It can draw on additional data such as vocal tone, body language, and facial expressions to determine a patient’s condition, leading to quicker and more accurate diagnoses for medical professionals.

- Antibody therapeutics: Absci Corporation uses machine learning to predict antibodies’ specificity, structure, and binding energy for faster and more efficient development of therapeutic antibodies.

Product design and development

Generative AI is transforming product design and development by producing design solutions that would be difficult for humans to create unaided. It can help automate data analysis and identify trends in customer behavior and preferences to inform product design. Furthermore, generative AI technology allows for virtual simulations of products to improve design accuracy, solve complex problems more efficiently, and speed up the research and development process. Startups such as Uizard, Ideeza, and Neural Concept provide AI-powered platforms that help optimize product engineering and improve R&D cycles. Uizard allows teams to create interactive user interfaces quickly, Ideeza helps identify optimal therapeutic antibodies for drug development, and Neural Concept provides deep-learning algorithms for enhanced engineering to optimize product performance.

Why build a generative AI solution?

In the competitive business landscape, staying ahead requires innovation and efficiency. Generative AI offers a transformative approach to achieving these goals. Here are several compelling reasons why building a generative AI solution can benefit your organization:

1. Increased efficiency

Generative AI excels at streamlining business operations and processes. By automating repetitive and time-consuming tasks, it frees up valuable resources, allowing your team to focus on more strategic and productive work. This boost in efficiency can lead to significant improvements in overall operational performance.

2. Enhanced creativity

One of the standout benefits of generative AI is its ability to foster creativity. By generating unique ideas and solutions that might not have been considered by human teams, generative AI can drive innovation. This can result in the development of advanced products, services, and strategies, giving your organization a competitive edge.

3. Boosted productivity

Automation is a key strength of generative AI, enabling businesses to handle more tasks and projects simultaneously. This increased productivity can lead to faster project completion times and the ability to scale operations more effectively. With generative AI, your organization can achieve more in less time.

4. Cost reduction

By automating processes traditionally handled by human labor, generative AI can lead to substantial cost savings. This reduction in labor costs can significantly impact your bottom line, allowing you to allocate resources more efficiently and invest in other critical areas of your business.

5. Improved decision-making

Generative AI is adept at analyzing vast amounts of data quickly and accurately. This capability supports better decision-making by providing actionable insights based on comprehensive data analysis. With generative AI, your organization can make informed decisions that drive strategic success.

6. Personalized customer experiences

Understanding individual customer preferences and behaviors is crucial for delivering personalized experiences. Generative AI can help your organization achieve this by tailoring offerings to meet the specific needs of each customer. This personalization enhances customer satisfaction and loyalty, leading to improved customer retention and business growth.

Building a generative AI solution is a strategic move that can drive significant benefits for organizations. From increasing efficiency and productivity to enhancing creativity and decision-making, generative AI offers a wide range of advantages. By integrating this technology into their operations, businesses can reduce costs, deliver personalized experiences, and stay ahead in a competitive landscape. Embracing generative AI is not just about keeping up with technological advancements; it’s about transforming how organizations innovate, operate, and grow.

The right tech stack to build a generative AI solution

Building a generative AI solution involves using a robust and versatile tech stack to ensure efficiency, scalability, and high performance. Here’s a comprehensive guide to the right tools and technologies required to build an efficient generative AI solution:

| Category | Tools and Technologies | Reason to Choose |
|---|---|---|
| Programming Language | Python | Widely used for AI and ML due to its simplicity and extensive library support. |
| Deep Learning Framework | TensorFlow, PyTorch | Provide extensive tools for building and training neural networks, known for scalability and flexibility. |
| Generative Model Architectures | GANs (Generative Adversarial Networks), VAEs (Variational Autoencoders) | Essential for creating complex generative models capable of producing high-quality outputs. |
| Data Processing | NumPy, Pandas, spaCy, NLTK | Facilitate efficient data manipulation and preprocessing, crucial for preparing data for GenAI models. |
| GPU Acceleration | NVIDIA CUDA, cuDNN | Enable high-performance computations necessary for training deep learning models. |
| Cloud Services | AWS, Azure, Google Cloud, IBM Cloud | Provide scalable and flexible infrastructure for deploying and managing GenAI solutions. |
| Model Deployment | TensorFlow Serving, PyTorch, Docker, Kubernetes, Flask, FastAPI | Support scalable and reliable deployment of AI models into production environments. |
| Web Framework | Flask, FastAPI, Django | Facilitate the development of web applications and APIs for integrating GenAI models. |
| Database | MongoDB, PostgreSQL | Offer robust data storage solutions capable of handling large volumes of structured and unstructured data. |
| Automated Testing | PyTest | Ensure the reliability and accuracy of GenAI models through automated testing. |
| Visualization | Matplotlib, Seaborn, Plotly | Enable the visualization of data and model results, aiding in the interpretation and analysis of AI outputs. |
| Experiment Tracking | TensorBoard, MLflow | Provide tools for tracking experiments, visualizing performance metrics, and managing model versions. |
| Image Processing | OpenCV, PIL | Support processing and analysis of visual data, crucial for tasks involving image generation and manipulation. |
| Version Control | GitHub, GitLab | Facilitate collaboration, version control, and continuous integration in AI projects. |

Considerations for choosing the right architecture for building generative AI solutions

Selecting the appropriate AI architecture is pivotal when developing generative AI solutions. The right choice ensures that your model performs optimally, delivering accurate and efficient results tailored to your specific needs. Here are key considerations to guide you in choosing the right architecture:

1. Nature of the input data

- Sequential data: For tasks involving sequential data such as text, speech, or time series, Recurrent Neural Networks (RNNs) are ideal. RNNs excel at processing and modeling data where the order and context from previous time steps are crucial. Variants like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks are particularly useful for managing long-term dependencies and mitigating issues such as vanishing gradients.

- Spatial data: When dealing with image data or tasks requiring spatial pattern recognition, Convolutional Neural Networks (CNNs) are the go-to choice. CNNs are designed to capture spatial hierarchies and patterns within images through layers of convolutions, pooling, and non-linear activation functions. They are highly effective for applications such as image classification, object detection, and image generation.

2. Complexity of the task

- Simple tasks: For straightforward generative tasks, simpler architectures may suffice. These might include basic RNNs for text generation or shallow CNNs for image processing.

- Complex tasks: More intricate tasks that require a deeper understanding of patterns and features may necessitate advanced architectures. For instance, generating high-resolution images or sophisticated text might require deeper CNNs or advanced RNN variants like LSTM and GRU. The complexity of the architecture should align with the complexity of the task to capture nuanced details effectively.

3. Availability of computational resources

- Resource constraints: If computational resources are limited, opting for architectures that require less computational power is essential. Shallower CNNs or simpler RNN configurations can be effective without demanding extensive computational resources. Considerations should also include the efficiency of the model during inference and deployment.

- High-resource environments: When resources are ample, leveraging more complex architectures is feasible. This could involve deeper CNNs with multiple layers for detailed feature extraction or sophisticated RNNs with enhanced memory capabilities. High computational power allows for experimenting with more advanced models and larger datasets.

4. Interpretability requirements

- Interpretability needs: In scenarios where understanding the model’s decision-making process is crucial, the interpretability of the architecture becomes a key factor. RNNs, with their sequential data processing nature, can often be more interpretable in tasks like text generation, where tracking the influence of previous inputs is valuable.

- Complex models: While CNNs are powerful for spatial data tasks, they can be less interpretable due to their complex feature extraction processes. Techniques such as visualization of activation maps and saliency maps can aid in interpreting CNN models, but these might not be as straightforward as understanding RNN models.

Choosing the right AI architecture for generative AI solutions involves balancing various factors, including the nature of the input data, task complexity, available computational resources, and interpretability needs. By aligning these considerations with the specific requirements of your project, you can select an architecture that performs effectively and fits within your operational constraints. Careful evaluation and selection ensure the development of robust and efficient generative AI solutions that meet your objectives and deliver high-quality results.

How to build a generative AI solution? A step-by-step guide

Building a generative AI solution requires a deep understanding of both the technology and the specific problem it aims to solve. It involves designing and training AI models to generate novel outputs based on input data, often optimizing a specific metric. Several key steps must be performed to build a successful generative AI solution, including defining the problem, collecting and preprocessing data, selecting appropriate algorithms and models, training and fine-tuning the models, and deploying the solution in a real-world context. Let us dive into the process.

Step 1: Defining the problem and objective setting

Every technological endeavor begins with identifying a challenge or need. In the context of generative AI, it’s paramount to comprehend the problem to be addressed and the desired outputs. A deep understanding of the specific technology and its capabilities is equally crucial, as it sets the foundation for the rest of the journey.

- Understanding the challenge: Any generative AI project begins with a clear problem definition. It’s essential first to articulate the exact nature of the problem. Are we trying to generate novel text in a particular style? Do we want a model that creates new images considering specific constraints? Or perhaps the challenge is to simulate certain types of music or sounds. Each of these problems requires a different approach and different types of data.

- Detailing the desired outputs: Once the overarching problem is defined, it’s time to drill down into specifics. If the challenge revolves around text, what language or languages will the model work with? What resolution or aspect ratio are we aiming for if it’s about images? What about color schemes or artistic styles? The granularity of your expected output can dictate the complexity of the model and the depth of data it requires.

- Technological deep dive: With a clear picture of the problem and desired outcomes, it’s necessary to delve into the underlying technology. This means understanding the mechanics of the neural networks at play, particularly the architecture best suited for the task. For instance, if the AI aims to generate images, a Convolutional Neural Network (CNN) might be more appropriate, whereas Recurrent Neural Networks (RNNs) or Transformer-based models like GPT and BERT are better suited for sequential data like text.

- Capabilities and limitations: Understanding the capabilities of the chosen technology is just as crucial as understanding its limitations. For instance, while GPT-3 may be exceptional at generating coherent and diverse text over short spans, it might struggle to maintain consistency in longer narratives. Knowing these nuances helps set realistic expectations and devise strategies to overcome potential shortcomings.

- Setting quantitative metrics: Finally, a tangible measure of success is crucial. Define metrics that will be used to evaluate the performance of the model. For text, this could involve metrics like BLEU or ROUGE scores, which measure n-gram overlap between generated content and reference text and serve as a proxy for quality. For images, metrics such as Inception Score or Fréchet Inception Distance can gauge the quality and diversity of generated images.
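To make the overlap-based text metrics more concrete, here is a minimal sketch of the two quantities that BLEU and ROUGE build on: clipped n-gram precision and n-gram recall. It is a simplification under stated assumptions (whitespace tokenization, a single reference, no brevity penalty or smoothing), and the function names are illustrative rather than taken from any library.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-grams of a token list as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(candidate, reference, n=1):
    """BLEU-style modified n-gram precision (no brevity penalty, one reference)."""
    cand = Counter(ngrams(candidate.split(), n))
    ref = Counter(ngrams(reference.split(), n))
    total = sum(cand.values())
    if total == 0:
        return 0.0
    # Each candidate n-gram is credited at most as often as it occurs in the reference.
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    return overlap / total


def rouge_n_recall(candidate, reference, n=1):
    """ROUGE-N-style recall: share of reference n-grams recovered by the candidate."""
    cand = Counter(ngrams(candidate.split(), n))
    ref = Counter(ngrams(reference.split(), n))
    total = sum(ref.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, cand[gram]) for gram, count in ref.items())
    return overlap / total
```

Clipping matters: a candidate that repeats one correct word many times should not score well on precision. For real evaluations, an established implementation of these metrics is usually preferable to a hand-rolled one.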

Step 2: Data collection and management

Before training an AI model, one needs data and lots of it. This process entails gathering vast datasets and ensuring their relevance and quality. Data should be sourced from diverse sources, curated for accuracy, and stripped of any copyrighted or sensitive content. Additionally, to ensure compliance and ethical considerations, one must be aware of regional or country-specific rules and regulations regarding data usage.

Key steps include:

- Sourcing the data: Building a generative AI solution starts with identifying the right data sources. Depending on the problem at hand, data can come from databases, web scraping, sensor outputs, APIs, custom collections offering a range of diverse examples or even proprietary datasets. The choice of data source often determines the quality and authenticity of the data, which in turn impacts the final performance of the AI model.

- Diversity and volume: Generative models thrive on vast and varied data. The more diverse the dataset, the more varied the outputs the model can generate. This involves collecting data across different scenarios, conditions, environments, and modalities. For instance, if one is training a model to generate images of objects, the dataset should ideally contain pictures of these objects taken under various lighting conditions, from different angles, and against different backgrounds.

- Data quality and relevance: A model is only as good as the data it’s trained on. Ensuring data relevance means that the collected data accurately represents the kind of tasks the model will eventually perform. Data quality is paramount; noisy, incorrect, or low-quality data can significantly degrade model performance and even introduce biases.

- Data cleaning and preprocessing: Data often requires cleaning and preprocessing before it is fed into a model. This step can include handling missing values, removing duplicates, eliminating outliers, and other tasks that ensure data integrity. Additionally, some generative models require data in specific formats, such as tokenized sentences for text or normalized pixel values for images.

- Handling copyrighted and sensitive information: With vast data collection, there’s always a risk of inadvertently collecting copyrighted or sensitive information. Automated filtering tools and manual audits can help identify and eliminate such data, ensuring legal and ethical compliance.

- Ethical considerations and compliance: Data privacy laws, such as GDPR in Europe or CCPA in California, impose strict guidelines on data collection, storage, and usage. Before using any data, it’s essential to ensure that all permissions are in place and that the data collection processes adhere to regional and international standards. This might include anonymizing personal data, allowing users to opt out of data collection, and ensuring data encryption and secure storage.

- Data versioning and management: As the model evolves and gets refined over time, the data used for its training might also change. Implementing data versioning solutions, like DVC or other data management tools, can help keep track of various data versions, ensuring reproducibility and systematic model development.
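Two of the steps above, removing duplicates and filtering sensitive information, can be sketched with the standard library alone. The example below is illustrative, not a complete compliance solution: the email pattern is a deliberately simple assumption, and the function names are hypothetical. Real pipelines typically combine several detectors with manual audits.

```python
import hashlib
import re

# Simplified pattern for illustration; it will not catch every valid address.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return " ".join(text.lower().split())


def deduplicate(records):
    """Keep the first occurrence of each record, comparing normalized content."""
    seen = set()
    unique = []
    for text in records:
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(text)
    return unique


def redact_emails(text, placeholder="[EMAIL]"):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_RE.sub(placeholder, text)


raw = ["Hello  World", "hello world", "Contact jane.doe@example.com today"]
cleaned = [redact_emails(r) for r in deduplicate(raw)]
```

Hashing normalized text catches exact and near-exact copies only; detecting paraphrased or partially overlapping documents would need a fuzzier technique.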

Step 3: Data processing and labeling

Once data is collected, it must be refined and prepared for training. This means cleaning the data to eliminate errors, normalizing it to a standard scale, and augmenting the dataset to improve its richness and depth. Beyond these steps, data labeling is essential. This involves manually annotating or categorizing data to facilitate more effective AI learning.

- Data cleaning: Before data can be used for model training, it must be devoid of inconsistencies, missing values, and errors. Data cleaning tools, such as pandas in Python, allow for handling missing data, identifying and removing outliers, and ensuring the integrity of the dataset. For text data, cleaning might also involve removing special characters, correcting spelling errors, or even handling emojis.

- Normalization and standardization: Data often comes in varying scales and ranges. It needs to be normalized or standardized so that no single feature unduly influences the model because of its scale. Normalization typically scales features to a range between 0 and 1, while standardization rescales features to have a mean of 0 and a standard deviation of 1. Techniques such as Min-Max Scaling or Z-score normalization are commonly employed.

- Data augmentation: For models, especially those in the field of computer vision, data augmentation is a game-changer. It artificially increases the size of the training dataset by applying various transformations like rotations, translations, zooming, or even color variations. For text data, augmentation might involve synonym replacement, back translation, or sentence shuffling. Augmentation not only improves model robustness but also prevents overfitting by introducing variability.

- Feature extraction and engineering: Often, raw data isn’t directly fed into AI models. Features, which are individual measurable properties of the data, need to be extracted. For images, this might involve extracting edge patterns or color histograms. For text, this can mean tokenization, stemming, or using embeddings like Word2Vec or BERT. For audio data, spectral features such as Mel-frequency cepstral coefficients (MFCCs) are extracted for voice recognition and music analysis. Feature engineering enhances the predictive power of the data, making models more efficient.

- Data splitting: The collected data is generally divided into training, validation, and test datasets. This approach allows for effective fine-tuning without overfitting, enables hyperparameter adjustments during validation, and ensures the model’s generalizability and performance stability are assessed through testing on unseen data.

- Data labeling: Data needs to be labeled for many AI tasks, especially supervised learning. This involves annotating the data with correct answers or categories. For instance, images might be labeled with what they depict, or text data might be labeled with sentiment. Manual labeling can be time-consuming and is often outsourced to platforms like Amazon Mechanical Turk. Semi-automated methods, where AI pre-labels and humans verify, are also becoming popular. Label quality is paramount; errors in labels can significantly degrade model performance.

- Ensuring data consistency: It’s essential to ensure chronological consistency, especially when dealing with time-series data or sequences. This might involve sorting, timestamp synchronization, or even filling gaps using interpolation methods.

- Embeddings and transformations: Especially in the case of text data, converting words into vectors (known as embeddings) is crucial. Pre-trained embeddings like GloVe, FastText, or transformer-based methods like BERT provide dense vector representations, capturing semantic meanings.
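The normalization, standardization, and data-splitting steps described above can be sketched as follows. This is a minimal standard-library illustration with hypothetical function names and default split fractions chosen as assumptions; in practice, libraries such as NumPy or pandas would handle the same operations on larger arrays.

```python
import random
from statistics import mean, pstdev


def min_max_scale(values):
    """Normalization: rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Standardization: shift to mean 0 and scale to standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def train_val_test_split(items, val_frac=0.1, test_frac=0.1, seed=0):
    """Shuffle once with a fixed seed, then cut into three disjoint subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = train_val_test_split(range(100))
```

One design point worth keeping in mind: scaling statistics (minimum, maximum, mean, standard deviation) are normally computed on the training split only and then reused for validation and test data, so that no information leaks from held-out data into training.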

Step 4: Choosing a foundational model

With data prepared, it’s time to select a foundational model, be it GPT-4, LLaMA-3, Mistral, or Google Gemini. These models serve as a starting point upon which additional training and fine-tuning are conducted, tailored to the specific problem.

Understanding foundational models: Foundational models are large-scale pre-trained models resulting from training on vast datasets. They capture a wide array of patterns, structures, and even world knowledge. By starting with these models, developers can leverage the inherent capabilities and further fine-tune them for specific tasks, saving significant time and computational resources.

Factors to consider when choosing a foundational model:

- Task specificity: Depending on the specific generative task, one model might be more appropriate than another. For instance:

- GPT (Generative Pre-trained Transformer): This is widely used for text generation tasks because it produces coherent and contextually relevant text over long passages. It’s suitable for tasks like content creation, chatbots, and even code generation.

- LLaMA: If the task revolves around multi-lingual capabilities or requires understanding across different languages, LLaMA could be a choice to consider.

- PaLM 2: Google’s PaLM 2 is another candidate. As with any model, understanding its strengths, weaknesses, and primary use cases is crucial when choosing.

- Dataset compatibility: The foundational model’s nature should align with the data you have. For instance, a model pre-trained primarily on textual data might not be the best fit for image generation tasks. Conversely, models like DALL-E 2 are designed specifically for creative image generation based on text descriptions.

- Model size and computational requirements: Large models like GPT-3, with 175 billion parameters, or GPT-4 offer high performance but require considerable computational power and memory. One might opt for smaller versions or different architectures depending on the infrastructure and resources available.

- Transfer learning capability: A model’s ability to generalize from one task to another, known as transfer learning, is vital. Some models are better suited to transfer their learned knowledge to diverse tasks. For example, BERT can be fine-tuned with a relatively small amount of data to perform a wide range of language processing tasks.

- Community and ecosystem: Often, the choice of a model is influenced by the community support and tools available around it. A robust ecosystem can ease the process of implementation, fine-tuning, and deployment. Models with a strong community, like those supported by Hugging Face, benefit from extensive libraries, tools, and pre-trained models readily available for use, which can drastically reduce development time and improve efficiency.
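When weighing model size against available infrastructure, a quick back-of-the-envelope calculation can help. The sketch below estimates the memory needed just to hold a model's weights at different numeric precisions. The function name and the 7-billion-parameter example are illustrative assumptions, and the estimate deliberately ignores activations, optimizer state, and inference caches, all of which add to the real footprint.

```python
# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gib(num_params, dtype="fp16"):
    """Approximate memory (in GiB) needed to hold the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


# Hypothetical 7-billion-parameter model, chosen only for illustration.
for dtype in ("fp32", "fp16", "int8"):
    print(f"{dtype}: {weight_memory_gib(7_000_000_000, dtype):.1f} GiB")
```

Training generally needs several times this amount because gradients and optimizer state are stored alongside the weights, so the figure is best read as a lower bound for deployment planning.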

Step 5: Fine-tuning and RAG

Fine-tuning and Retrieval-Augmented Generation (RAG) are pivotal in refining generative AI models to produce high-quality, contextually appropriate outputs.

Fine-tuning generative AI models: Fine-tuning is a crucial step to tailor a pre-trained model to specific tasks or datasets, enhancing its ability to generate relevant and nuanced outputs. Select a foundational model that closely aligns with your generative task, such as GPT for text or a CNN for images. Importantly, the model’s architecture remains largely the same, but its weights are adjusted to better reflect the new data’s peculiarities.

The fine-tuning process involves the following:

- Data preparation: Ensure your data is well-processed and formatted correctly for the task. This might include tokenization for text or normalization for images.

- Model adjustments: Modify the final layers of the model if necessary, particularly for specific output types like classifications.

- Parameter optimization: Adjust the model’s parameters, focusing on learning rates and layer-specific adjustments. Employ differential learning rates, where earlier layers use smaller learning rates to retain general features while later layers use higher rates to learn task-specific details.

- Regularization techniques: Apply techniques like dropout or weight decay to prevent overfitting, ensuring the model generalizes well to new, unseen data.
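The idea of differential learning rates can be sketched as a simple schedule that assigns each layer its own rate. The example below is one possible scheme (geometric decay from the output layer back toward the input), not the only one; the function name, layer names, and default values are hypothetical.

```python
def layerwise_learning_rates(layer_names, top_lr=1e-4, decay=0.5):
    """Assign a learning rate per layer, given layers ordered from input to output.

    The last (output-side) layer gets top_lr; each earlier layer gets the
    rate of the layer after it multiplied by decay, so early layers that
    hold general features change the least.
    """
    n = len(layer_names)
    return {
        name: top_lr * decay ** (n - 1 - i)
        for i, name in enumerate(layer_names)
    }


# Hypothetical layer names for a small model.
rates = layerwise_learning_rates(
    ["embed", "block1", "block2", "head"], top_lr=1e-3, decay=0.5
)
```

In a framework such as PyTorch, values like these could be supplied to an optimizer as per-parameter-group learning rates, with one group per layer.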

Retrieval-Augmented Generation (RAG) involves two critical phases: Retrieval and Augmented Generation.

Retrieval: In this phase the model searches through a database of organizational documents to locate information relevant to a user’s input or query. This phase employs a variety of techniques, ranging from basic keyword search to more sophisticated methods like semantic search, which interprets the underlying intent of queries to find semantically related results. Key components of the retrieval phase include:

- Semantic search: Utilizes AI and machine learning to go beyond keyword matching, understanding the semantic intent behind queries to retrieve closely related content, such as matching “tasty desserts” with “delicious sweets.”

- Embedding (Vectors): Converts text from documents and queries into vector representations using models like BERT or GloVe, allowing the system to perform semantic searches in a high-dimensional space.

- Vector database: Stores embeddings in a scalable, efficient vector database provided by vendors such as Pinecone or Weaviate and designed for fast retrieval across extensive collections of vectors.

- Document chunking: Breaks large documents into smaller, topic-specific chunks to improve the quality of retrieval, making it easier to match query-specific vectors and retrieve precise segments for generation.

Augmented generation: Once relevant information is retrieved, it’s used to augment the generative process, enabling the model to produce contextually rich responses. This is achieved using general-purpose large language models (LLMs) or task-specific models:

- Integration with LLMs: General-purpose models generate responses based on retrieved information tailored to specific prompts, such as summarizing content or answering questions.

- Task-specific models: Models designed for specific applications generate responses directly suited to specific tasks, leveraging the retrieved chunks for accurate answers.

Incorporating RAG into the development of a generative AI application involves seamlessly integrating the retrieval and generation phases. This ensures that the generative model not only produces high-quality output but does so in a way that is informed by and relevant to the specific context provided by the retrieval system. The effectiveness of an RAG system hinges on its ability to dynamically combine deep understanding from retrieved data with sophisticated generation capabilities, addressing complex user queries with precision and relevance.
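The retrieval and augmentation phases can be sketched end to end in a few lines. This is a toy illustration under loud assumptions: the `embed` function is a bag-of-words counter standing in for a learned embedding model, so it matches shared words rather than meaning (it would not link "tasty desserts" to "delicious sweets"); an in-memory list stands in for a vector database; and the prompt template is hypothetical. The final call to a language model is left out.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Document chunking: split text into consecutive fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Retrieval: return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query, context_chunks):
    """Augmentation: place retrieved chunks in the prompt sent to the generator."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "Refunds are processed within five business days.",
    "Our office is closed on public holidays.",
    "Shipping takes two weeks for international orders.",
]
question = "How long do refunds take?"
prompt = build_prompt(question, retrieve(question, docs, k=1))
```

Swapping the toy `embed` for a real embedding model and the list for a vector database changes the quality and scale of retrieval, but the overall flow (chunk, embed, rank, assemble prompt) stays the same.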

Step 6: Model evaluation and refinement

After training, the AI model’s efficacy must be gauged. This evaluation measures the similarity between the AI-generated outputs and actual data. But evaluation isn’t the endpoint; refinement is a continuous process. Over time, and with more data or feedback, the model undergoes adjustments to improve its accuracy, reduce inconsistencies, and enhance its output quality.

Model evaluation: Model evaluation is a pivotal step to ascertain the model’s performance after training. This process ensures the model achieves the desired results and is reliable in varied scenarios.

- Metrics and loss functions:

- Depending on the task, various metrics can be employed. For generative image tasks, metrics like Fréchet Inception Distance (FID) or Inception Score can be used to quantify how similar generated data is to real data.

- For textual tasks, BLEU, ROUGE, and METEOR scores might be used to compare generated text to reference text.

- Additionally, monitoring the loss function, which measures the difference between the predicted outputs and actual data, provides insights into the model’s convergence.

- Validation and test sets:

- Validation sets help adjust hyperparameters and monitor overfitting during the fine-tuning of pre-trained models, ensuring the modifications improve generalization rather than merely fitting the training data.

- Test sets evaluate the model’s performance on entirely new data after fine-tuning, verifying its effectiveness and generalization across different scenarios, which is crucial for assessing the real-world applicability of generative AI models.

- Qualitative analysis:

- Beyond quantitative metrics, it’s often insightful to visually or manually inspect the generated outputs. This can help identify glaring errors, biases, or inconsistencies that might not be evident in numerical evaluations.

Model refinement: Ensuring that a model performs optimally often requires iterative refinement based on evaluations and feedback.

- Hyperparameter tuning:

- Parameters like learning rate, batch size, and regularization factors can significantly influence a model’s performance. Techniques like grid search, random search, or Bayesian optimization can be employed to find the best hyperparameters.

- Architecture adjustments:

- One might consider tweaking the model’s architecture depending on the evaluation results. This could involve adding or reducing layers, changing the type of layers, or adjusting the number of neurons.

- Transfer learning and further fine-tuning:

- In some cases, it might be beneficial to leverage transfer learning by using weights from another successful model as a starting point.

- Additionally, based on feedback, the model can undergo further fine-tuning on specific subsets of data or with additional data to address specific weaknesses.

- Regularization and dropout:

- Increasing regularization or dropout rates can improve generalization if the model is overfitting. Conversely, if the model is underfitting, reducing them might be necessary.

- Feedback loop integration:

- An efficient way to refine models, especially in production environments, is to establish feedback loops where users or systems can provide feedback on generated outputs. This feedback can then be used for further training and refinement.

- Monitoring drift:

- Models in production might face data drift, where the nature of the incoming data changes over time. Monitoring for drift and refining the model accordingly ensures that the AI solution remains accurate and relevant.

- Adversarial training:

- For generative models, adversarial training, where the model is trained against an adversary aiming to find its weaknesses, can be an effective refinement method. This is especially prevalent in Generative Adversarial Networks (GANs).

While model evaluation provides a snapshot of the model’s performance, refinement is an ongoing process. It ensures that the model remains robust, accurate, and effective as the environment, data, or requirements evolve.
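Of the refinement techniques above, hyperparameter tuning by random search is simple enough to sketch directly. In the example below, `toy_validation_loss` is a hypothetical stand-in for the expensive step of training a model and measuring its loss on the validation set, and the search-space keys are illustrative assumptions rather than a standard format.

```python
import math
import random


def random_search(objective, space, n_trials=20, seed=0):
    """Sample hyperparameters at random and keep the lowest-scoring set."""
    rng = random.Random(seed)
    best_params, best_score = None, float("inf")
    for _ in range(n_trials):
        params = {
            # Learning rates are sampled on a log scale, as they span orders of magnitude.
            "learning_rate": 10 ** rng.uniform(*space["log10_learning_rate"]),
            "batch_size": rng.choice(space["batch_size"]),
            "dropout": rng.uniform(*space["dropout"]),
        }
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_loss(params):
    """Hypothetical objective; a real one would train and evaluate a model."""
    return (math.log10(params["learning_rate"]) + 3) ** 2 + (params["dropout"] - 0.1) ** 2


space = {
    "log10_learning_rate": (-5, -1),
    "batch_size": [8, 16, 32],
    "dropout": (0.0, 0.5),
}
best_params, best_score = random_search(toy_validation_loss, space, n_trials=50, seed=1)
```

Grid search would instead enumerate fixed combinations, and Bayesian optimization would use earlier trials to decide where to sample next; random search is shown here only because it is the shortest to write down.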

Step 7: Deployment and monitoring

When the model is ready, it’s time for deployment. However, deployment isn’t merely a technical exercise; it also involves ethics. Principles of transparency, fairness, and accountability must guide the release of any generative AI into the real world. Once deployed, continuous monitoring is imperative. Regular checks, feedback collection, and system metric analysis ensure that the model remains efficient, accurate, and ethically sound in diverse real-world scenarios.

- Infrastructure setup:

- Depending on the size and complexity of the model, appropriate hardware infrastructure must be selected. For large models, GPU or TPU-based systems might be needed.

- Cloud platforms like AWS, Google Cloud, and Azure offer ML deployment services, such as SageMaker, Vertex AI (the successor to AI Platform), or Azure Machine Learning, which facilitate scaling and managing deployed models.

- Containerization:

- Container technologies like Docker can encapsulate the model and its dependencies, ensuring consistent performance across diverse environments.

- Orchestration tools such as Kubernetes can manage and scale these containers as per the demand.

- API integration:

- For easy access by applications or services, models are often deployed behind APIs using frameworks like FastAPI or Flask.

- Ethical considerations:

- Anonymization: It’s vital to anonymize inputs and outputs to preserve privacy, especially when dealing with user data.

- Bias check: Before deployment, it’s imperative to conduct thorough checks for any unintended biases the model may have imbibed during training.

- Fairness: Ensuring the model does not discriminate or produce biased results for different user groups is crucial.

- Transparency and accountability:

- Documentation: Clearly document the model’s capabilities, limitations, and expected behaviors.

- Open channels: Create mechanisms for users or stakeholders to ask questions or raise concerns.
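As a rough picture of what deploying a model behind an API means, the sketch below exposes a single JSON endpoint as a plain WSGI application using only the standard library. It swaps in hand-written routing for what FastAPI or Flask would provide, so treat it as an illustration of the request and response flow rather than a recommended setup. The `/generate` path and the `generate` function are hypothetical placeholders for a real route and a real model call.

```python
import json


def generate(prompt):
    """Hypothetical placeholder for a call to the deployed model."""
    return f"echo: {prompt}"


def app(environ, start_response):
    """Minimal WSGI app: POST /generate with {"prompt": ...} returns {"output": ...}."""
    if environ.get("REQUEST_METHOD") != "POST" or environ.get("PATH_INFO") != "/generate":
        start_response("404 Not Found", [("Content-Type", "application/json")])
        return [b'{"error": "not found"}']

    # Read exactly the number of bytes the client declared.
    length = int(environ.get("CONTENT_LENGTH") or 0)
    payload = json.loads(environ["wsgi.input"].read(length) or b"{}")

    body = json.dumps({"output": generate(payload.get("prompt", ""))}).encode("utf-8")
    start_response(
        "200 OK",
        [("Content-Type", "application/json"), ("Content-Length", str(len(body)))],
    )
    return [body]
```

For local experimentation, an app like this could be served with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`. A production deployment would add input validation, authentication, and timeouts, which web frameworks make considerably easier.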

Monitoring:

- Performance metrics:

- Monitoring tools track real-time metrics like latency, throughput, and error rates. Alarms can be set for any anomalies.

- Feedback loops:

- Establish mechanisms to gather user feedback on model outputs. This can be invaluable in identifying issues and areas for improvement.

- Model drift detection:

- Over time, the incoming data’s nature may change, causing a drift. Tools like TensorFlow Data Validation can monitor for such changes.

- User Experience (UX) monitoring:

- This is especially important for generative AI applications that interact directly with users, such as chatbots, personalized content creators, or AI-driven design tools. Understanding how users perceive and interact with these outputs can guide improvements and adaptations to better meet user needs.

- Re-training cycles:

- Based on feedback and monitored metrics, models might need periodic re-training with fresh data to maintain accuracy.

- Logging and audit trails:

- Keep detailed logs of all model predictions, especially for critical applications. This ensures traceability and accountability.

- Ethical monitoring:

- Set up systems to detect any unintended consequences or harmful behaviors of the AI. Continuously update guidelines and policies to prevent such occurrences.

- Security:

- Regularly check for vulnerabilities in the deployment infrastructure. Ensure data encryption, implement proper authentication mechanisms, and follow best security practices.

Deployment is a multifaceted process where the model is transitioned into real-world scenarios. Monitoring ensures its continuous alignment with technical requirements, user expectations, and ethical standards. Both steps require the marriage of technology and ethics to ensure the generative AI solution is functional and responsible.
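Drift monitoring can be illustrated with a simple statistic that compares the distribution of a numeric input feature at training time with what the deployed model currently sees. The sketch below uses the Population Stability Index (PSI), chosen here as one common option rather than the method any particular tool applies; the bin count and the small floor for empty bins are assumptions to adjust per use case.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one numeric feature.

    Bins are equal-width over the baseline's range; values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


baseline = [i / 100 for i in range(100)]   # feature values seen during training
shifted = [v + 0.5 for v in baseline]      # hypothetical production values after drift
drift_score = population_stability_index(baseline, shifted)
```

A score of zero means the two samples fall into the bins identically, and larger values indicate a bigger shift. The alerting threshold is a judgment call that depends on the feature and the application, so it is best tuned against historical data.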

Best practices for building generative AI solutions

Building generative AI solutions is a complex process that needs careful planning, execution, and monitoring. Following best practices increases the chances that your generative AI solution delivers the desired outcomes. Here are some of the best practices for building generative AI solutions:

- Define clear objectives: Clearly define the problem you want to solve and the objectives of the generative AI solution during the design and development phase to ensure that the solution meets the desired goals.

- Gather high-quality data: Train the model on high-quality data that is relevant to the problem you want to solve. Clean and preprocess the data to ensure its quality and relevance.

- Use appropriate algorithms: Choose appropriate algorithms for the problem you want to solve, which involves testing different algorithms to select the best-performing one.

- Create a robust and scalable architecture: Design the architecture to handle increased usage and demand, using distributed computing, load balancing, and caching to spread the workload across multiple servers.

- Optimize for performance: Optimize the solution for performance by using techniques such as caching, data partitioning, and asynchronous processing to improve the speed and efficiency of the solution.

- Monitor performance: Continuously monitor the solution’s performance to identify any issues or bottlenecks that may impact performance. This can involve using performance profiling tools, log analysis, and metrics monitoring.

- Ensure security and privacy: Ensure the solution is secure and protects user privacy by implementing appropriate security measures such as encryption, access control, and data anonymization.

- Test thoroughly: Thoroughly test the solution to ensure it meets the desired quality standards in various real-world scenarios and environments.

- Document the development process: Document the code, data, and experiments used in development so that the process is reproducible and transparent.

- Continuously improve the solution: Continuously improve the solution by incorporating user feedback, monitoring performance, and adding new features and capabilities.
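As an illustration of the caching and asynchronous processing mentioned in the performance item above, the following sketch wraps a model call in an in-memory cache and runs several requests concurrently. `slow_generate` is a hypothetical stand-in for a real model or API call, and the cache size is an arbitrary assumption. Caching like this only makes sense when returning the same output for a repeated prompt is acceptable.

```python
import asyncio
from functools import lru_cache


def slow_generate(prompt: str) -> str:
    """Hypothetical stand-in for an expensive model call."""
    return f"generated text for: {prompt}"


@lru_cache(maxsize=1024)
def cached_generate(prompt: str) -> str:
    # Identical prompts are served from memory after the first call.
    return slow_generate(prompt)


async def generate_many(prompts: list[str]) -> list[str]:
    # Run the blocking calls in worker threads so they can overlap.
    tasks = [asyncio.to_thread(cached_generate, p) for p in prompts]
    return await asyncio.gather(*tasks)
```

For example, `asyncio.run(generate_many(["a", "b", "a"]))` returns the results in the same order as the prompts. In a multi-server deployment, the in-process cache would typically be replaced by a shared cache so that all servers benefit from it.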

Key strategies for developing high-performing generative AI solutions

Developing a successful generative AI solution requires careful planning and adherence to best practices. To ensure that your AI model is effective, secure, and aligned with cutting-edge advancements, consider the following key strategies:

1. Leverage high-quality, diverse data

A strong foundation in data is critical for training generative AI models:

- Diverse and representative data: Collect a broad range of examples to capture the complexity of the problem domain. This diversity helps the model generate more accurate and varied outputs.

- Data collection and preprocessing: Utilize reliable sources and ensure data is clean, normalized, and free from biases. Augmentation techniques can also enhance the dataset, improving model performance.

2. Experiment with various architectures and models

Generative AI is continuously evolving, making it important to explore different models:

- Explore different architectures: Test various generative models such as GANs (Generative Adversarial Networks), VAEs (Variational Autoencoders), and transformer-based models to find the most effective approach for your application.

- Model variations: Experiment with advanced model variations like conditional GANs or progressive growing GANs. This helps in discovering configurations that best meet your needs.

3. Continuously evaluate and fine-tune your models

Regular assessment and optimization are key to maintaining high model performance:

- Evaluation metrics: Use appropriate metrics such as Inception Score (IS), FID (Fréchet Inception Distance), or user feedback to measure the quality of outputs.

- Model refinement: Based on evaluation results, regularly fine-tune hyperparameters and model settings to improve performance and address any limitations.
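For reference, FID compares the statistics of real and generated images in the feature space of a pretrained Inception network. Writing the feature mean and covariance as $(\mu_r, \Sigma_r)$ for real images and $(\mu_g, \Sigma_g)$ for generated images (the notation is chosen here for illustration), the standard definition is:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```

Lower values indicate that the generated distribution is closer to the real one, and the score is zero when the two sets of statistics are identical. Because the score depends on the sample size and on the feature extractor used, it is most useful for comparing models evaluated under the same setup.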

4. Collaborate with domain experts

Engage with experts to enhance your generative AI solution:

- Expert insights: Collaborate with domain specialists who can provide valuable insights and guide you in addressing field-specific requirements.

- Impact improvement: Their expertise can help refine the model and improve its effectiveness in real-world applications.

5. Stay informed about the latest advances

Keep abreast of new developments in the field:

- Ongoing learning: Stay updated with recent research, techniques, and tools by reading papers, attending conferences, and participating in AI communities.

- Incorporate innovations: Apply cutting-edge methodologies and technologies to keep your generative AI solution current and competitive.

6. Focus on high-impact use cases

Identify and prioritize areas where generative AI can deliver the most significant benefits:

- Targeted applications: Focus on specific aspects of your business or solutions that would gain the most from generative AI.

- Cost-effective solutions: Choose impactful upgrades over broad implementations, especially when resources are limited.

7. Ensure robust data protection and compliance

Protect user data and adhere to regulations:

- Data security: Implement strong encryption and access controls to safeguard sensitive information.

- Regulatory compliance: Follow data protection laws and best practices to build trust and avoid legal issues.
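One way to reconcile prediction logging with data protection is to pseudonymize user identifiers before they are written to a log. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library. The function names and record fields are hypothetical, and the secret key is assumed to come from a secrets manager, not from source code. This complements encryption and access controls and does not replace them.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Return a keyed hash so logs never contain the raw identifier."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def audit_record(user_id: str, prompt: str, output: str, secret_key: bytes) -> str:
    """Build one JSON log line for a model prediction."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": pseudonymize(user_id, secret_key),
        # Store a digest and a length, not the raw text (an illustrative choice).
        "prompt_chars": len(prompt),
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
    }
    return json.dumps(record)
```

The same user always maps to the same pseudonym under a given key, so activity can still be traced across log lines. Whether raw prompts and outputs may be stored at all depends on the application and the regulations that apply to it.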

8. Develop focused GenAI solutions

Opt for models tailored to specific needs rather than generic solutions:

- Targeted models: Create smaller, specialized solutions or fine-tune existing ones to address particular business requirements.

- Resource efficiency: This approach can be more practical and cost-effective compared to training large, general-purpose models.

9. Acknowledge and address model limitations

Be transparent about the capabilities of your generative AI solution:

- Set realistic expectations: Clearly communicate any limitations or potential biases in the model’s outputs to users.

- Continuous improvement: Recognize that generative AI is not perfect and focus on ongoing refinements to enhance performance.

By applying these key strategies, you can build generative AI solutions that are not only high-performing but also secure and adaptable to evolving technological advancements. This approach ensures that your GenAI solutions are effective, practical, and aligned with the latest trends in the field.

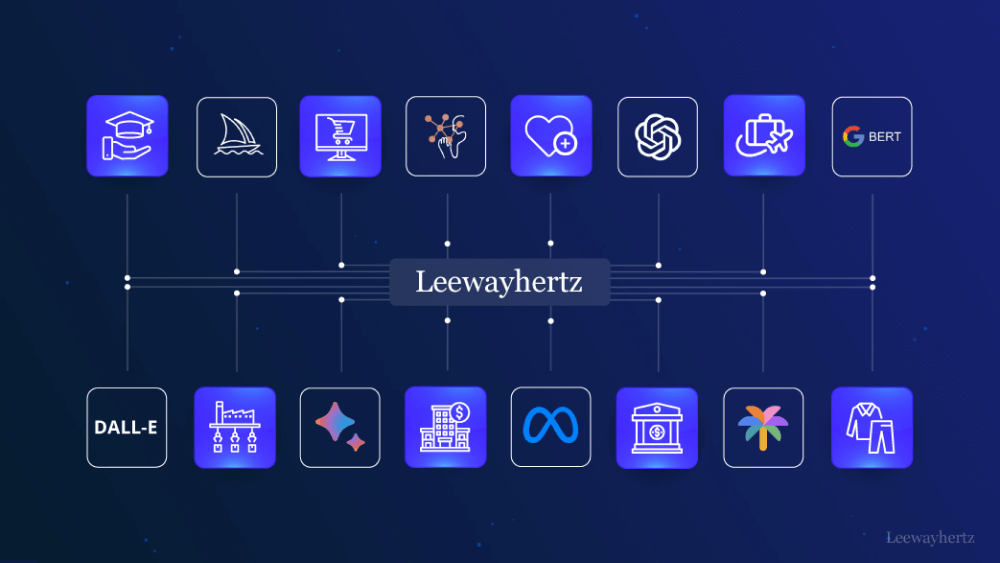

How does LeewayHertz’s GenAI orchestration platform, ZBrain, simplify the GenAI solution development process?

In the rapidly evolving world of AI, enterprises are constantly seeking ways to integrate AI solutions that enhance efficiency, innovation, and data utilization. LeewayHertz’s GenAI orchestration platform, ZBrain, emerges as a transformative tool designed to simplify the development and deployment of Generative AI (GenAI) solutions. By providing a comprehensive, low-code environment tailored for enterprise needs, ZBrain streamlines the complexities associated with GenAI application development, ensuring businesses can swiftly leverage AI to meet their specific requirements.

Key features of ZBrain

1. Low-code platform: ZBrain’s low-code interface enables users to develop advanced AI applications with minimal coding knowledge. This approach democratizes AI development, allowing a broader range of users to build and customize genAI solutions efficiently.

2. Proprietary data utilization: The platform empowers businesses to harness their private data, enhancing the relevance and precision of AI applications. By leveraging proprietary data, ZBrain ensures that AI solutions are tailored to the unique needs of each organization.

3. Custom development: ZBrain supports the creation of tailored AI solutions that address unique business challenges. Its flexibility allows for customization to fit various use cases, ensuring that the platform can meet diverse enterprise requirements.

4. High-accuracy results: With built-in guardrails and hallucination controls, ZBrain is designed to deliver precise and reliable AI outputs. This focus on accuracy reduces errors and enhances the trustworthiness of the generated results.

5. Comprehensive solution lifecycle: From development to deployment, ZBrain covers the entire AI application lifecycle. Its extensive feature set manages all aspects of AI solution development, ensuring a seamless transition from concept to operational deployment.

6. Enterprise-ready: Designed with enterprise needs in mind, ZBrain meets the requirements for security, scalability, and integration. It seamlessly integrates with existing technology stacks and systems, providing a robust solution for complex business environments.

Comparing ZBrain and traditional custom development

- Development time: Traditional custom development can take up to six months to deliver a functional AI solution, often requiring a large team of engineers and developers. ZBrain significantly reduces this timeline with its low-code interface and pre-built components that accelerate development.

- Flows management: Custom development typically involves manual and ongoing management of algorithms, which can be resource-intensive. ZBrain’s low-code platform simplifies workflow creation and management, reducing the complexity and effort required for algorithm maintenance.

- Integration and maintenance: Custom development often involves time-consuming integration and maintenance efforts, requiring specialized engineering resources. ZBrain streamlines integration with its ready-to-use APIs and reduces maintenance needs with its low-code tools and automated updates.

- Model integration: Integrating new AI models into custom solutions can be challenging and may require significant codebase changes. ZBrain simplifies model integration, making it easier to incorporate both proprietary and open-source models.

Why choose ZBrain?

1. Efficiency: ZBrain’s low-code platform and pre-built components enable rapid development and deployment of AI solutions, reducing time and resource requirements and accelerating innovation.

2. Flexibility: With support for a wide range of AI models and integration capabilities, ZBrain offers the flexibility to choose and switch between different models based on specific requirements. This model-agnostic approach avoids vendor lock-in and keeps options open for future advancements.

3. Security and compliance: ZBrain ensures that sensitive data is handled securely with robust privacy controls and compliance monitoring. This focus on data protection is crucial for enterprises concerned about maintaining data integrity and meeting regulatory standards.

4. Real-time validation: The platform’s continuous evaluation engine provides real-time monitoring and validation of AI applications, ensuring consistent performance and proactive issue resolution.

5. Advanced data management: ZBrain’s advanced data ingestion pipeline supports diverse data sources and formats, enabling comprehensive data integration and enhancing the accuracy of AI solutions.

LeewayHertz’s ZBrain simplifies GenAI solution development by offering a low-code, flexible, and secure platform that accelerates the creation and deployment of AI applications. Its comprehensive features and streamlined approach provide a powerful alternative to traditional custom development, making it an ideal choice for enterprises looking to harness the full potential of generative AI.

Why should you consider creating an AI impact assessment when designing a generative AI solution?

As you design a generative AI solution, one crucial step you shouldn’t overlook is conducting an AI impact assessment. This assessment is fundamental for ensuring that your generative AI project is developed responsibly, ethically, and effectively. Here’s why an AI impact assessment is essential and how it can benefit your generative AI solution:

What is an AI impact assessment?

An AI impact assessment thoroughly examines how your generative AI system will affect individuals, society, and the environment. Think of it as a “health check-up” for your AI project, aimed at understanding and mitigating potential risks while enhancing positive outcomes. This process involves answering key questions about your AI system, such as:

- How will our generative AI benefit users?

- Could it create any issues or risks?

- Is the genAI solution fair and unbiased?

- How safe is the generative AI for its users?

Addressing these questions ensures that your generative AI solution is innovative, responsible and beneficial.

Why are AI impact assessments crucial?

1. Ethical responsibility

Generative AI technologies, which create new content or data, have significant implications. An AI impact assessment helps you evaluate the ethical aspects of your AI system, ensuring it aligns with societal values and ethical standards. By considering potential ethical concerns, you can avoid unintended harm and promote fairness in your AI’s deployment.

2. Legal compliance

Many jurisdictions are implementing regulations for AI technologies, including generative models. An AI impact assessment ensures your solution adheres to these legal requirements, helping you avoid legal issues and penalties. This proactive approach safeguards your organization from regulatory breaches.

3. Risk management

Generative AI systems can introduce unique risks, such as creating misleading or harmful content. Identifying these risks early through an impact assessment allows you to develop strategies to mitigate them, protecting your reputation and conserving resources.

4. Transparency and trust

Transparency is vital for gaining user and stakeholder trust. An AI impact assessment documents your generative AI’s purpose, use cases, and potential impacts. This transparency builds trust by demonstrating that you have thoroughly considered the implications of your technology.

5. Innovation and improvement

By understanding the challenges and potential pitfalls associated with your generative AI system, you can foster innovation. The assessment encourages creative problem-solving and continuous improvement, leading to more effective and robust AI solutions.

Benefits of creating an AI impact assessment